Learning with Music Signals: Technology Meets Education (LEARN)

The core mission of the LEARN project is to approach and explore the concept of learning from different angles using music as a challenging and instructive application domain. The project is funded by the German Research Foundation as part of the Reinhart Koselleck Programme. On this website, we summarize the project's main objectives and provide links to project-related resources (data, demonstrators, websites) and publications.

- Principal investigator: Prof. Dr. Meinard Müller

- Programme period: 2023–2029

- Grant No. 500643750 (MU 2686/15-1)

- Programme type: Reinhart Koselleck Project

Project Description

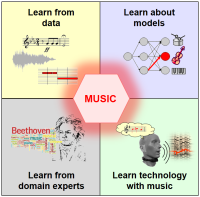

Learning with Music Signals: Technology Meets Education

The revolution in music distribution, storage, and consumption has fueled tremendous interest in developing techniques and tools for organizing, analyzing, retrieving, and presenting music-related data. As a result, the field of music information retrieval (MIR) has matured over the last 20 years into an independent research area related to many different disciplines, including signal processing, machine learning, information retrieval, musicology, and the digital humanities. This project aims to break new ground in technology and education in these disciplines using music as a challenging and instructive multimedia domain. The project is unique in its way of approaching and exploring the concept of learning from different angles. First, by learning from data, we will build on and advance recent deep learning (DL) techniques for extracting complex features and hidden relationships directly from raw music signals. Second, by learning from the experience of traditional engineering approaches, our objective is to better understand existing DL-based systems and to develop more interpretable ones by integrating prior knowledge in various ways. In particular, as a novel strategy with great potential, we want to transform classical model-based MIR approaches into differentiable multilayer networks, which can then be blended with DL-based techniques to form explainable hybrid models that are less vulnerable to data biases and confounding factors. Third, in collaboration with domain experts, we will consider specialized music corpora to gain a deeper understanding of both the music data and our models' behavior while exploring the potential of computational models for musicological research. Fourth, we will examine how music may serve as a motivating vehicle to make learning in technical disciplines such as signal processing or machine learning an interactive pursuit. Through our holistic approach to learning, we want to achieve significant advances in the development of explainable hybrid models and reshape how recent technology is applied and communicated in interdisciplinary research and education.
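As a rough illustration of the second angle (turning a classical model-based method into a differentiable one), the sketch below takes dynamic time warping and replaces its hard minimum with a smooth soft-minimum, which is the basic idea behind soft DTW. It is a minimal NumPy sketch under stated assumptions, not code from the LEARN project: the names `soft_min` and `dtw_cost`, the squared Euclidean local cost, and the smoothing parameter `gamma` are illustrative choices.

```python
import numpy as np


def soft_min(values, gamma):
    """Smooth minimum -gamma * log(sum(exp(-v / gamma))).

    For gamma == 0 this falls back to the ordinary (hard) minimum.
    """
    values = np.asarray(values, dtype=float)
    if gamma == 0.0:
        return float(values.min())
    z = -values / gamma
    z_max = z.max()  # shift for a numerically stable log-sum-exp
    return float(-gamma * (z_max + np.log(np.exp(z - z_max).sum())))


def dtw_cost(X, Y, gamma=0.0):
    """Accumulated alignment cost between two feature sequences.

    X and Y hold one feature vector per row (1-D input is treated as
    a sequence of scalars). gamma == 0 gives classical DTW; gamma > 0
    gives a smooth variant of the same recursion.
    """
    X = np.asarray(X, dtype=float).reshape(len(X), -1)
    Y = np.asarray(Y, dtype=float).reshape(len(Y), -1)
    N, M = len(X), len(Y)

    # Local cost matrix: squared Euclidean distance (an assumption).
    C = ((X[:, None, :] - Y[None, :, :]) ** 2).sum(axis=2)

    # Accumulated cost with a border of +inf around the start cell.
    R = np.full((N + 1, M + 1), np.inf)
    R[0, 0] = 0.0
    for i in range(1, N + 1):
        for j in range(1, M + 1):
            predecessors = [R[i - 1, j], R[i, j - 1], R[i - 1, j - 1]]
            R[i, j] = C[i - 1, j - 1] + soft_min(predecessors, gamma)
    return float(R[N, M])


if __name__ == "__main__":
    X = [0.0, 1.0, 2.0]
    Y = [0.0, 1.0, 1.0, 2.0]
    print(dtw_cost(X, Y, gamma=0.0))  # classical DTW cost
    print(dtw_cost(X, Y, gamma=1.0))  # smoothed cost, never larger
```

Because the soft-minimum is smooth in its arguments, the same recursion written in an automatic differentiation framework would let gradients flow from the alignment cost back to the input features, whereas the hard minimum only passes gradients along a single path. As `gamma` approaches zero, the smoothed cost approaches the classical one.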

Project Description (German Version)

Learning with Music Signals: Technology Meets Education

The considerable advances in how we distribute, store, and use music have sparked great interest in developing techniques and tools for organizing, analyzing, retrieving, searching, and presenting music-related data. As a result, the field of music information retrieval (MIR) has developed over the last 20 years into an independent research area with links to very different disciplines such as signal processing, machine learning, information retrieval, musicology, and the digital humanities. This project aims to break new ground in technology development and education in these disciplines, with music serving as a challenging and instructive domain of multimedia data. What makes the project unique is that we approach the concept of learning from different angles. First, we will investigate novel machine learning techniques based on deep learning (DL) to extract complex features and hidden relationships directly from music signals. Second, our goal is to learn from the experience of traditional engineering approaches, both to better understand existing DL-based systems and to develop more interpretable systems by integrating prior knowledge. In particular, as a novel strategy with great potential, we want to transform classical model-based MIR approaches into differentiable multilayer networks. These will then be fused with DL-based techniques into explainable hybrid models that are less susceptible to imbalances in the data (data bias) and to confounding factors. Third, in collaboration with domain experts, we will consider specialized music corpora to gain a deeper understanding of both the music data and the behavior of our models, while also investigating the potential of computer-based methods for musicological research. Fourth, music will serve as a motivating medium for making learning in technical disciplines such as signal processing or machine learning interactive. Through our holistic approach to learning, we want not only to achieve considerable advances in the development of explainable hybrid models but also to fundamentally reshape how new technologies are applied and taught in interdisciplinary research and teaching.

Project-Related Activities

- Invited Talk (Meinard Müller): Beethoven.exe: How Machines Listen, Learn, and Remix Music, Weihnachtskolloquium der Fakultät für Mathematik und Informatik, Julius-Maximilians-Universität Würzburg, December 16, 2025

- Invited Talk (Meinard Müller): Loss Functions Matter: Three Case Studies in Informed Loss Design, Lecture Series Musical Informatics, Johannes Kepler Universität Linz, November 19, 2025

- Tutorial (Meinard Müller, Johannes Zeitler): Differentiable Alignment Techniques for Music Processing, International Society for Music Information Retrieval Conference (ISMIR), Daejeon, South Korea, September 21, 2025

- Invited Talk (Meinard Müller): Analyzing the Musical Scene, Symposium TU(R)NING SPACE – Analysis, synthesis and perception of kinetic auditory space in music, art and hearing science, Technical University of Munich (TUM), July 4, 2025

- Invited Talk (Meinard Müller): Digitale Musikverarbeitung: Vom Scheitern Lernen, Ringvorlesung Transdisziplinäre Aspekte Digitaler Methodik in den Geistes- und Kulturwissenschaften, Johannes Gutenberg-Universität Mainz, July 2, 2025

- Invited Talk (Meinard Müller): Loss Functions Matter: Three Case Studies in Informed Loss Design, Workshops of the Audio Data Analysis and Signal Processing (ADASP) Group, Télécom Paris, June 12, 2025

- Invited Talk (Meinard Müller): Learning with Music Signals: Technology Meets Education, Visualisierungskolloquium, VISUS, Universität Stuttgart, January 24, 2025

- Invited Talk (Meinard Müller): Automatische Erschließung von Musikdaten, Collegium Alexandrinum, Friedrich-Alexander-Universität Erlangen-Nürnberg, January 16, 2025

- Invited Talk (Meinard Müller): Digitale Musikverarbeitung: Vom Scheitern Lernen, Workshop: Digitalität & Kulturelle Resilienz: Afghanische Musik in der Diaspora, UNESCO Chair in Digital Culture and Arts in Education, Friedrich-Alexander-Universität Erlangen-Nürnberg, January 9, 2025

- Tutorial (Meinard Müller, Masataka Goto, Jin Ha Lee): Exploring 25 Years of Music Information Retrieval: Perspectives and Insights, International Society for Music Information Retrieval Conference (ISMIR), San Francisco, USA, November 10, 2024

- Invited Talk (Peter Meier): Real-Time Beat Tracking and Control Signal Generation for Interactive Music Applications, Centre for Digital Music (C4DM) Seminar Series, Queen Mary University of London, September 9, 2024

- Dagstuhl Seminar (organized by Meinard Müller, Cynthia Liem, Brian McFee): Learning with Music Signals: Technology Meets Education, Leibniz-Zentrum für Informatik, Wadern, Germany, July 21–25, 2024

- Invited Lecture (Meinard Müller): Automatische Erschließung von Musikdaten, FAU Scientia Vorlesungsreihe, Friedrich-Alexander-Universität Erlangen-Nürnberg, June 5, 2024

- Tutorial (Meinard Müller): Learning with Music Signals: Technology Meets Education, International Society for Music Information Retrieval Conference (ISMIR), Milan, Italy, November 25, 2023

- Tutorial (Meinard Müller): Learning with Music Signals: Technology Meets Education, Eurographics (EG), Saarbrücken, Germany, May 8, 2023

Project-Related Resources and Demonstrators

The following list provides an overview of the most important publicly accessible resources created in the LEARN project:

- Demo website for the IEEE/ACM TASLPRO paper, 2025

- Demo website for the SMC paper, 2025

- Demo website for the DAFx paper, 2024

- Demo website for the Dagstuhl music game, 2024

Project-Related Publications

The following publications reflect the main scientific contributions of the work carried out in the LEARN project.

- Sebastian Strahl and Meinard Müller

dYIN and dSWIPE: Differentiable Variants of Classical Fundamental Frequency Estimators

IEEE/ACM Transactions on Audio, Speech, and Language Processing, 33, 2025. DOI: 10.1109/TASLPRO.2025.3581119; Details: https://ieeexplore.ieee.org/document/11044787; Demo: https://audiolabs-erlangen.de/resources/MIR/2025-TASLPRO-dYIN-dSWIPE; Code: https://github.com/groupmm/df0

- Ben Maman, Johannes Zeitler, Meinard Müller, and Amit H. Bermano

Multi-Aspect Conditioning for Diffusion-Based Music Synthesis: Enhancing Realism and Acoustic Control

IEEE/ACM Transactions on Audio, Speech, and Language Processing, 33: 68–81, 2025. DOI: 10.1109/TASLP.2024.3507553; Details: https://ieeexplore.ieee.org/document/10771710; Demo: https://benadar293.github.io/multi-aspect-conditioning

- Meinard Müller, Stefan Balke, and Masataka Goto

The Story Behind the RWC Music Database: An Interview with Masataka Goto

Transactions of the International Society for Music Information Retrieval (TISMIR), 8(1): 154–164, 2025. DOI: 10.5334/tismir.261; Details: https://transactions.ismir.net/articles/10.5334/tismir.261; Demo: https://www.youtube.com/playlist?list=PLpaL3fT5fH2ohNCY3nw_k63H7ozkixvp5

- Charles Ballester, Baptiste Bacot, Louis Bigo, Vanessa Nina Borsan, Louis Couturier, Ken Déguernel, Quentin Dinel, Laurent Feisthauer, Klaus Frieler, Mark Gotham, Richard Groult, Johannes Hentschel, Alexandre d'Hooge, Dinh-Viet-Toan Le, Florence Levé, Francesco Maccarini, Ivana Maričić, Gianluca Micchi, Meinard Müller, Alexandros Stamatiadis, Tom Taffin, Patrice Thibaud, Christof Weiß, Rui Yang, Emmanuel Leguy, and Mathieu Giraud

Interacting with Annotated and Synchronized Music Corpora on the Dezrann Web Platform

Transactions of the International Society for Music Information Retrieval (TISMIR), 8(1): 121–139, 2025. DOI: 10.5334/tismir.212; Details: https://transactions.ismir.net/articles/10.5334/tismir.212; Demo: https://doc.dezrann.net/status

- Stefan Balke, Axel Berndt, and Meinard Müller

ChoraleBricks: A Modular Multitrack Dataset for Wind Music Research

Transactions of the International Society for Music Information Retrieval (TISMIR), 8(1): 39–54, 2025. DOI: 10.5334/tismir.252; Details: https://transactions.ismir.net/articles/10.5334/tismir.252; Demo: https://www.audiolabs-erlangen.de/resources/MIR/2025-ChoraleBricks

- Ching-Yu Chiu, Lele Liu, Christof Weiß, and Meinard Müller

Cross-Modal Approaches to Beat Tracking: A Case Study on Chopin Mazurkas

Transactions of the International Society for Music Information Retrieval (TISMIR), 8(1): 55–69, 2025. DOI: 10.5334/tismir.238; Details: https://transactions.ismir.net/articles/10.5334/tismir.238

- Benjamin Henzel, Meinard Müller, and Christof Weiß

Style Evolution of Western Choral Music: A corpus-based Strategy

Computational Humanities Research (CHR), 1: 1–13, 2025. DOI: 10.1017/chr.2025.10016

- Hans-Ulrich Berendes, Ben Maman, and Meinard Müller

Tuning Matters: Analyzing Musical Tuning Bias in Neural Vocoders

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 166–173, 2025. DOI: 10.5281/zenodo.17706359

- Ching-Yu Chiu, Sebastian Strahl, and Meinard Müller

dPLP: A Differentiable Version of Predominant Local Pulse Estimation

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 198–205, 2025. DOI: 10.5281/zenodo.17706373

- Jonathan Yaffe, Ben Maman, Meinard Müller, and Amit Bermano

Count the Notes: Histogram-Based Supervision for Automatic Music Transcription

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 469–476, 2025. DOI: 10.5281/zenodo.17706488

- Johannes Zeitler and Meinard Müller

Reformulating Soft Dynamic Time Warping: Insights into Target Artifacts and Prediction Quality

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 127–133, 2025. DOI: 10.5281/zenodo.17706349

- Meinard Müller, Cynthia Liem, Brian McFee, and Simon Schwär

Learning with Music Signals: Technology Meets Education (Dagstuhl Seminar 24302)

Dagstuhl Reports, 14(7): 115–152, 2025. DOI: 10.4230/DagRep.14.7.115; Details: https://www.dagstuhl.de/24302

- Timothy Tsai, Kavi Dey, Yigitcan Özer, and Meinard Müller

Dense–Sparse Dynamic Time Warping for Customizing Piano Concerto Accompaniments

In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP): 1–5, 2025. DOI: 10.1109/ICASSP49660.2025.10890080; Details: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=10890080; Demo: https://github.com/HMC-MIR/PianoConcertoAccompaniment

- Peter Meier, Meinard Müller, and Stefan Balke

Analyzing Pitch Estimation Accuracy in Cross-Talk Scenarios: A Study with Wind Instruments

In Proceedings of the Sound and Music Computing Conference (SMC): 3–10, 2025. DOI: 10.5281/zenodo.15835032; Details: https://zenodo.org/records/15835033; Demo: https://www.audiolabs-erlangen.de/resources/MIR/2025-SMC-PitchCrosstalk

- Sebastian Strahl, Yigitcan Özer, Hans-Ulrich Berendes, and Meinard Müller

Hearing Your Way Through Music Recordings: A Text Alignment and Synthesis Approach

In Proceedings of the Sound and Music Computing Conference (SMC): 65–72, 2025. DOI: 10.5281/zenodo.15839750; Demo: https://audiolabs-erlangen.de/resources/MIR/2025-SMC-TextAlignSynth; Code: https://github.com/groupmm/textalignsynth

- Peter Meier, Sebastian Strahl, Simon Schwär, Meinard Müller, and Stefan Balke

Pitch Estimation in Real Time: Revisiting SWIPE with Causal Windowing

In Proceedings of the International Symposium on Computer Music Multidisciplinary Research (CMMR): 285–297, 2025. DOI: 10.5281/zenodo.17496630; Demo: https://audiolabs-erlangen.de/resources/MIR/2025-CMMR-RTSWIPE; Code: https://github.com/groupmm/real_time_swipe

- Peter Meier, Meinard Müller, and Stefan Balke

A Multi-User Interface for Real-Time Intonation Monitoring in Music Ensembles

In Proceedings Mensch und Computer, Workshop für Innovative Computerbasierte Musikinterfaces (ICMI), 2025. DOI: 10.18420/muc2025-mci-ws06-202

- Stefan Balke, Peter Meier, and Meinard Müller

Practicing Alone, Playing Together: A Persona-Based Design Approach for Amateur Wind Musicians

In Proceedings Mensch und Computer, Workshop für Innovative Computerbasierte Musikinterfaces (ICMI), 2025. DOI: 10.18420/muc2025-mci-ws06-199

- Christian Dittmar, Johannes Zeitler, Stefan Balke, Simon Schwär, and Meinard Müller

PULSE-IT: Lightweight and Expressive Synthesis of Wind Instrument Playing

In Late-Breaking and Demo Session of the International Conference on Music Information Retrieval (ISMIR): 1–3, 2025. Demo: https://www.audiolabs-erlangen.de/resources/MIR/2025_DittmarZBSM_WindInstrumentSynth_ISMIR-LBD

- Sebastian Strahl and Meinard Müller

Semi-Supervised Piano Transcription Using Pseudo-Labeling Techniques

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 173–181, 2024. DOI: 10.5281/zenodo.14877303; Details: https://www.audiolabs-erlangen.de/resources/MIR/2024-ISMIR-PianoTranscriptionSemiSup

- Johannes Zeitler, Ben Maman, and Meinard Müller

Robust and Accurate Audio Synchronization Using Raw Features From Transcription Models

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 120–127, 2024. DOI: 10.5281/zenodo.14877291

- Johannes Zeitler, Michael Krause, and Meinard Müller

Soft Dynamic Time Warping with Variable Step Weights

In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2024. DOI: 10.1109/icassp48485.2024.1044657; Demo: https://github.com/groupmm/weightedSDTW

- Ben Maman, Meinard Müller, Johannes Zeitler, and Amit Bermano

Performance Conditioning for Diffusion-Based Multi-Instrument Music Synthesis

In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP): 5045–5049, 2024. DOI: 10.1109/ICASSP48485.2024.10445979; Demo: https://benadar293.github.io/midipm/

- Gaël Richard, Vincent Lostanlen, Yi-Hsuan Yang, and Meinard Müller

Model-Based Deep Learning for Music Information Research: Leveraging Diverse Knowledge Sources to Enhance Explainability, Controllability, and Resource Efficiency

IEEE Signal Processing Magazine, 41(6): 51–59, 2024. DOI: 10.1109/MSP.2024.3415569; Details: https://ieeexplore.ieee.org/document/10819669

- Peter Meier, Ching-Yu Chiu, and Meinard Müller

A Real-Time Beat Tracking System with Zero Latency and Enhanced Controllability

Transactions of the International Society for Music Information Retrieval (TISMIR), 7(1): 213–227, 2024. DOI: 10.5334/tismir.189

- Peter Meier, Simon Schwär, and Meinard Müller

A Real-Time Approach for Estimating Pulse Tracking Parameters for Beat-Synchronous Audio Effects

In Proceedings of the International Conference on Digital Audio Effects (DAFx): 314–321, 2024. Demo: https://audiolabs-erlangen.de/resources/MIR/2024-DAFx-RealTimePLP

- Johannes Zeitler, Christof Weiß, Vlora Arifi-Müller, and Meinard Müller

BPSD: A Coherent Multi-Version Dataset for Analyzing the First Movements of Beethoven's Piano Sonatas

Transactions of the International Society for Music Information Retrieval (TISMIR), 7(1): 195–212, 2024. DOI: 10.5334/tismir.196; Demo: https://zenodo.org/records/12783403

- Meinard Müller and Ching-Yu Chiu

A Basic Tutorial on Novelty and Activation Functions for Music Signal Processing

Transactions of the International Society for Music Information Retrieval (TISMIR), 7(1): 179–194, 2024. DOI: 10.5334/tismir.202; Demo: https://github.com/groupmm/edu_novfct

- Ching-Yu Chiu, Johannes Zeitler, Vlora Arifi-Müller, and Meinard Müller

Downbeat Tracking for Western Classical Music Recordings: A Case Study for Beethoven Piano Sonatas

In Proceedings of the Deutsche Jahrestagung für Akustik (DAGA): 1–4, 2024.

- Meinard Müller, Simon Dixon, Anja Volk, Bob L. T. Sturm, Preeti Rao, and Mark Gotham

Introducing the TISMIR Education Track: What, Why, How?

Transactions of the International Society for Music Information Retrieval (TISMIR), 7(1): 85–98, 2024. DOI: 10.5334/tismir.199; Details: https://transactions.ismir.net/articles/10.5334/tismir.199

- Yigitcan Özer, Leo Brütting, Simon Schwär, and Meinard Müller

libsoni: A Python Toolbox for Sonifying Music Annotations and Feature Representations

Journal of Open Source Software (JOSS), 9(96): 1–6, 2024. DOI: 10.21105/joss.06524; Demo: https://github.com/groupmm/libsoni

Project-Related Student Projects and Theses

- Research Internships. DNN-based music analysis with PyTorch, Summer 2025

- Peter Kodl (2025).

Computer-Assisted Visualization of Harmonic Structures in Music Recordings: A Case Study on Beethoven Piano Sonatas.

Bachelor Thesis, Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), December 2025.

- Research Internships. DNN-based music analysis and synthesis, Summer 2024

- Rico Rosenbusch (2024).

Automated Real-Time Beat Tracking: Response Time and Confidence Analysis.

Bachelor Thesis, Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), September 2024.

- Ole Frederik Müermann (2024).

Real-Time Beat Tracking for Creative Music Production.

Bachelor Thesis, Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), August 2024.


Project-Related Ph.D. Theses

- Peter Meier

Real-Time Algorithms for Beat Tracking and Pitch Estimation

PhD Thesis, Friedrich-Alexander-Universität Erlangen-Nürnberg, 2025.