Demos and Interfaces

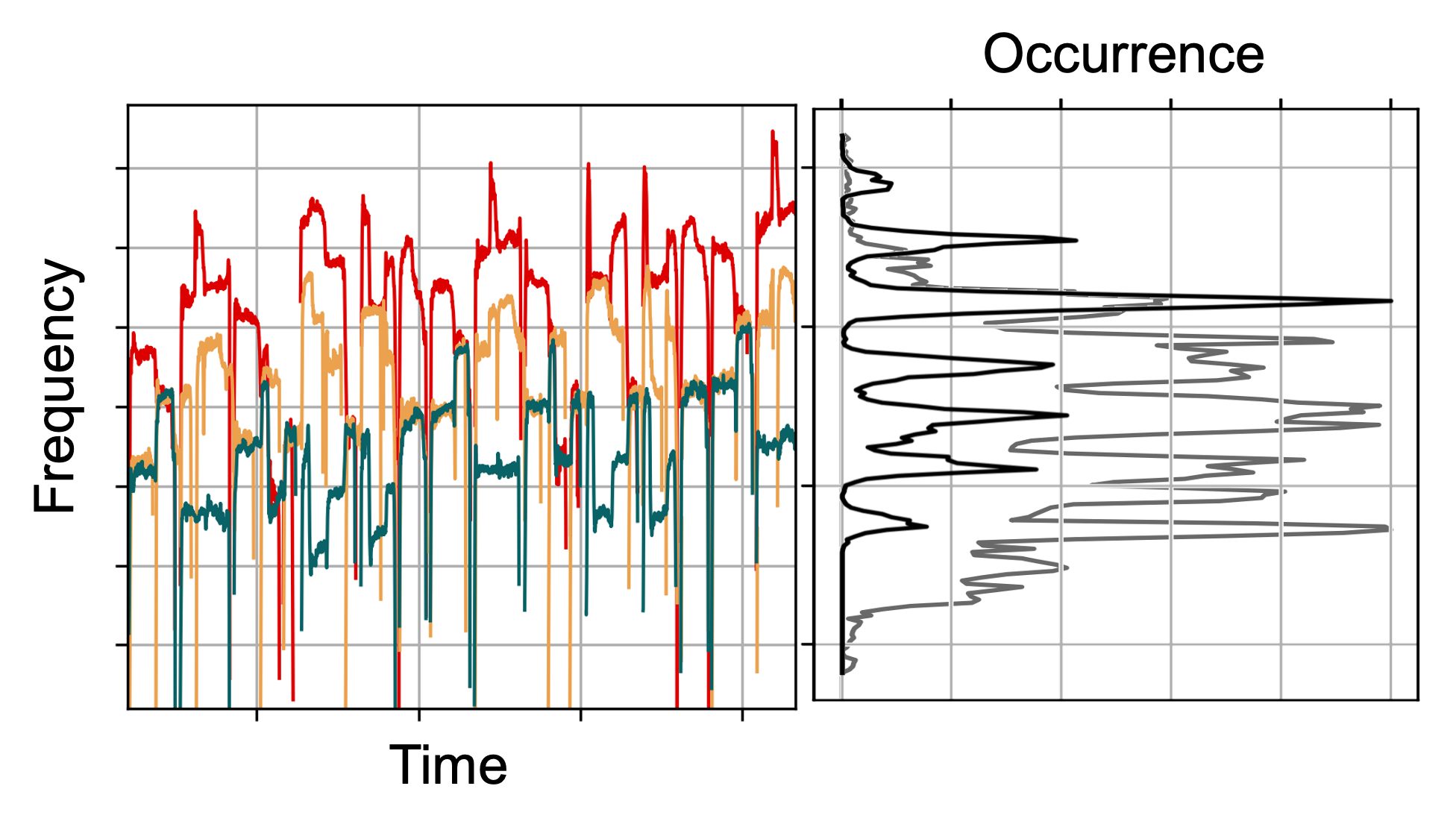

Computer-Assisted Analysis of Field Recordings: A Case Study of Georgian Funeral Songs

Link: Dataset

Three-voiced funeral songs from Svaneti in North-West Georgia (also referred to as Zär) are believed to represent one of Georgia’s oldest preserved forms of collective music-making. Throughout a Zär performance, the singers often jointly and intentionally drift upwards in pitch. Furthermore, the singers tend to use pitch slides at the beginning and end of sung notes. As part of a study on interactive computational tools for tonal analysis of Zär, we compiled a dataset from the previously annotated audio material, which we release under an open-source license for research purposes.
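A joint upward drift of this kind can be quantified, for instance, as the slope of a straight line fitted to an F0 trajectory expressed in cents. The following Python sketch is only an illustration and not the method of the study: it assumes F0 values in Hz on a shared time grid with 0 marking unvoiced frames, and the function names are hypothetical rather than part of the released dataset.

```python
import math
from typing import Sequence


def hz_to_cents(f0_hz: float, reference_hz: float = 55.0) -> float:
    """Convert Hz to cents above a reference (55 Hz is an arbitrary choice)."""
    return 1200.0 * math.log2(f0_hz / reference_hz)


def estimate_drift(times_s: Sequence[float], f0_hz: Sequence[float]) -> float:
    """Least-squares slope of an F0 trajectory in cents per second.

    Frames with f0 <= 0 are treated as unvoiced and skipped (assumed convention).
    """
    points = [(t, hz_to_cents(f)) for t, f in zip(times_s, f0_hz) if f > 0]
    if len(points) < 2:
        raise ValueError("need at least two voiced frames")
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_c = sum(c for _, c in points) / n
    cov = sum((t - mean_t) * (c - mean_c) for t, c in points)
    var = sum((t - mean_t) ** 2 for t, _ in points)
    if var == 0.0:
        raise ValueError("voiced frames must span more than one time stamp")
    return cov / var


# Synthetic trajectory rising by 10 cents per second, with one unvoiced frame.
times = [0.1 * i for i in range(100)]
f0 = [220.0 * 2.0 ** (10.0 * t / 1200.0) for t in times]
f0[5] = 0.0
print(round(estimate_drift(times, f0), 3))  # 10.0
```

Since the slope is computed in cents, it does not depend on the chosen reference frequency, and drifts of voices singing in different registers remain directly comparable.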

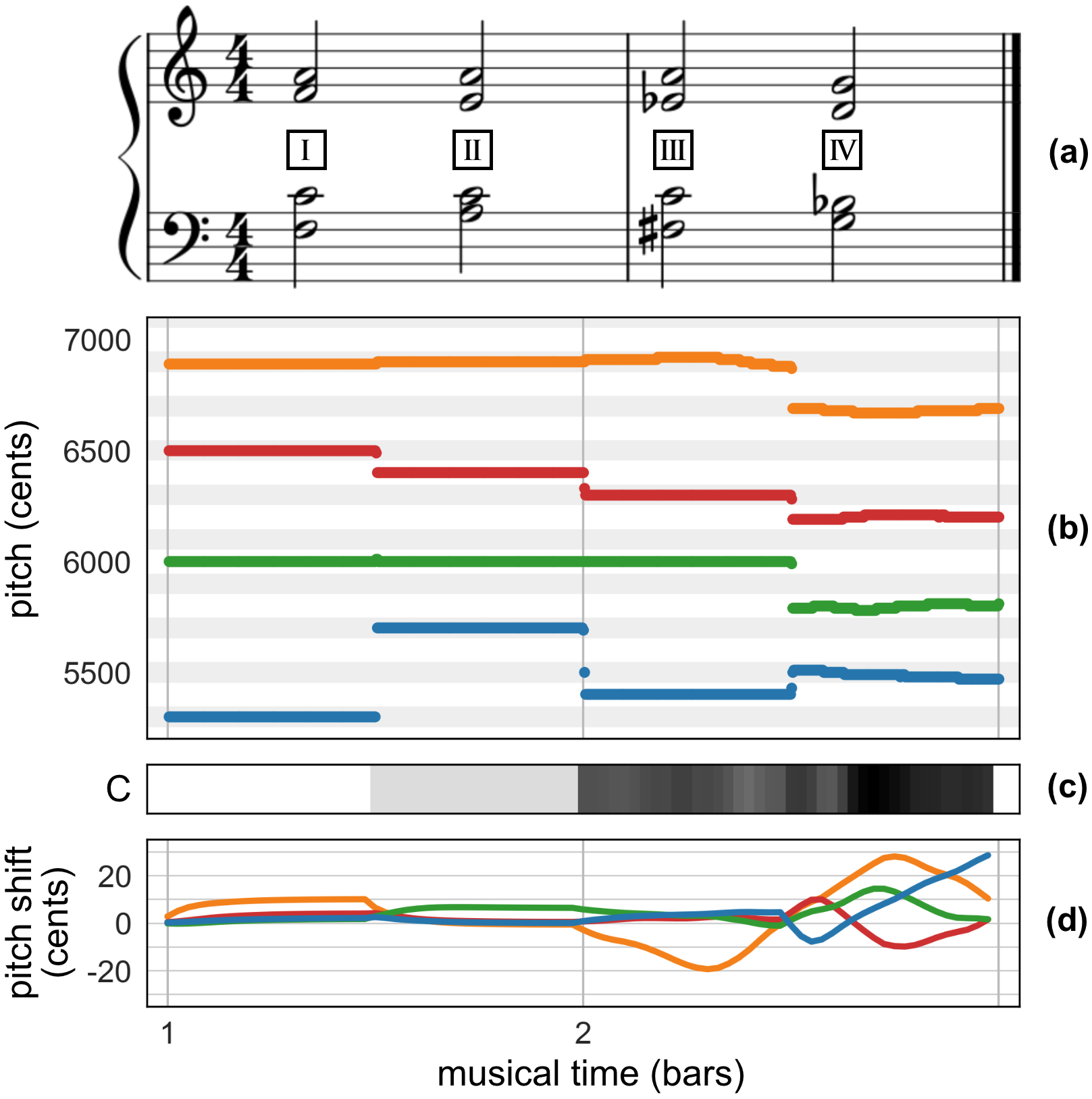

A Differentiable Cost Measure for Intonation Processing in Polyphonic Music

Link: Demo

Intonation is the process of choosing an appropriate pitch for a given note in a musical performance. Particularly in polyphonic singing, where all musicians can continuously adapt their pitch, this leads to complex interactions. To achieve an overall balanced sound, the musicians dynamically adjust their intonation considering musical, perceptual, and acoustical aspects. When adapting the intonation in a recorded performance, a sound engineer may have to individually fine-tune the pitches of all voices to account for these aspects in a similar way. In this paper, we formulate intonation adaptation as a cost minimization problem. As our main contribution, we introduce a differentiable cost measure by adapting and combining existing principles for measuring intonation. In particular, our measure consists of two terms, representing a tonal aspect (the proximity to a tonal grid) and a harmonic aspect (the perceptual dissonance between salient frequencies). We show that, by combining these two aspects, our measure can be used to flexibly account for different artistic intents while allowing for robust and joint processing of multiple voices in real time. In an experiment, we demonstrate the potential of our approach for the task of intonation adaptation of amateur choral music using recordings from a publicly available multitrack dataset.
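The two-term structure can be illustrated with a toy version. The Python sketch below is not the paper's cost measure; it assumes a smooth periodic tonal term (1 − cos of the position relative to a 100-cent equal-tempered grid), a harmonic term built from the Plomp–Levelt roughness curve in Sethares' parametrization over four partials per voice, a hypothetical weight `alpha`, and plain gradient descent with finite-difference gradients in place of automatic differentiation. Pitches are given in cents relative to A4 = 440 Hz, one value per voice.

```python
import math
from typing import Callable, List, Sequence

# Illustrative constants (assumptions, not values from the paper).
A4_HZ = 440.0            # cents are measured relative to A4 = 440 Hz
GRID_STEP_CENTS = 100.0  # 12-tone equal-tempered tonal grid
N_PARTIALS = 4           # partials per voice used in the harmonic term


def cents_to_hz(c: float) -> float:
    return A4_HZ * 2.0 ** (c / 1200.0)


def tonal_cost(cents: Sequence[float]) -> float:
    """Smooth periodic penalty: 0 on grid points, maximal halfway between them."""
    return sum(1.0 - math.cos(2.0 * math.pi * c / GRID_STEP_CENTS) for c in cents)


def pair_dissonance(f1: float, a1: float, f2: float, a2: float) -> float:
    """Plomp-Levelt roughness of two partials (Sethares' parametrization)."""
    s = 0.24 / (0.0207 * min(f1, f2) + 18.96)
    df = abs(f2 - f1)
    return a1 * a2 * (math.exp(-3.51 * s * df) - math.exp(-5.75 * s * df))


def harmonic_cost(cents: Sequence[float]) -> float:
    """Summed roughness between the partials of every pair of voices."""
    partials = [
        [(k * cents_to_hz(c), 0.88 ** (k - 1)) for k in range(1, N_PARTIALS + 1)]
        for c in cents
    ]
    total = 0.0
    for i in range(len(partials)):
        for j in range(i + 1, len(partials)):
            for f1, a1 in partials[i]:
                for f2, a2 in partials[j]:
                    total += pair_dissonance(f1, a1, f2, a2)
    return total


def total_cost(cents: Sequence[float], alpha: float) -> float:
    """Weighted sum; alpha trades off the tonal against the harmonic term."""
    return alpha * tonal_cost(cents) + (1.0 - alpha) * harmonic_cost(cents)


def numerical_gradient(fn: Callable[[List[float]], float], x: List[float],
                       eps: float = 1e-3) -> List[float]:
    """Central finite differences, standing in for automatic differentiation."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((fn(up) - fn(down)) / (2.0 * eps))
    return grad


def adapt_intonation(cents: Sequence[float], alpha: float = 0.5,
                     lr: float = 100.0, steps: int = 200) -> List[float]:
    """Jointly shift all voices (in cents) by plain gradient descent."""
    x = list(cents)
    for _ in range(steps):
        g = numerical_gradient(lambda v: total_cost(v, alpha), x)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x
```

With `alpha=1.0` only the tonal term is active, so each voice is pulled to its nearest grid pitch (e.g., `[-1180.0, -515.0]` converges to approximately `[-1200.0, -500.0]`); smaller values let the harmonic term move voices away from the grid toward less rough configurations. Because every term is differentiable almost everywhere, an autodiff framework could replace the finite differences.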

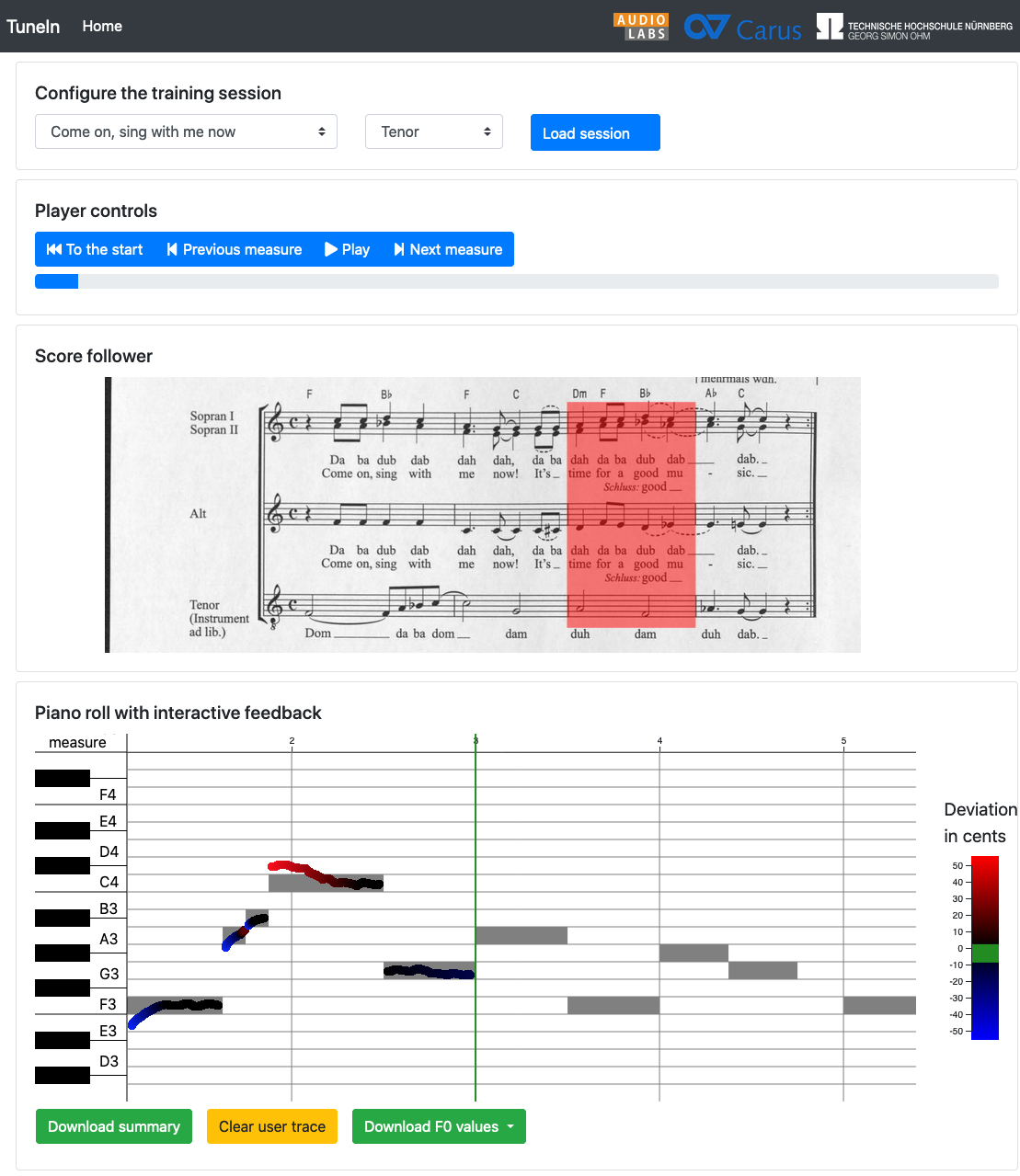

TuneIn: A Web-Based Interface for Practicing Choral Parts

Link: Web-based Interface

Choir singers typically practice their choral parts individually in preparation for joint rehearsals. In recent years, applications that support such individual rehearsals, e.g., with sing-along and score-following functionality, have become popular. In this work, we present a web-based interface with real-time intonation feedback for choir rehearsal preparation. The interface combines several open-source tools that have been developed by the MIR community.
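At its core, real-time intonation feedback compares the singer's current F0 estimate with the target pitch of the choral part. The Python sketch below shows only that comparison step and is not TuneIn's implementation; it assumes equal temperament with A4 = 440 Hz, a target note given as a MIDI number (as it might come from score following), an F0 estimate supplied by an external pitch tracker, and a hypothetical ±10-cent tolerance.

```python
import math

A4_HZ = 440.0           # assumed concert pitch
TOLERANCE_CENTS = 10.0  # hypothetical "in tune" threshold


def hz_to_midi(f0_hz: float) -> float:
    """Fractional MIDI pitch (69 = A4) of a frequency in Hz."""
    return 69.0 + 12.0 * math.log2(f0_hz / A4_HZ)


def deviation_cents(f0_hz: float, target_midi: int) -> float:
    """Signed deviation from the target note in cents (positive = sharp)."""
    return 100.0 * (hz_to_midi(f0_hz) - target_midi)


def feedback(f0_hz: float, target_midi: int) -> str:
    """Map one F0 estimate to a simple feedback label; 0 Hz means unvoiced."""
    if f0_hz <= 0.0:
        return "silent"
    dev = deviation_cents(f0_hz, target_midi)
    if dev > TOLERANCE_CENTS:
        return "too high"
    if dev < -TOLERANCE_CENTS:
        return "too low"
    return "in tune"


print(feedback(440.0, 69))                     # in tune
print(feedback(440.0 * 2 ** (25 / 1200), 69))  # too high
print(feedback(261.63, 60))                    # in tune (C4 is about 261.63 Hz)
```

In a live setting, this function would be called once per analysis frame, and the labels (or the raw deviation in cents) would drive the visual display.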

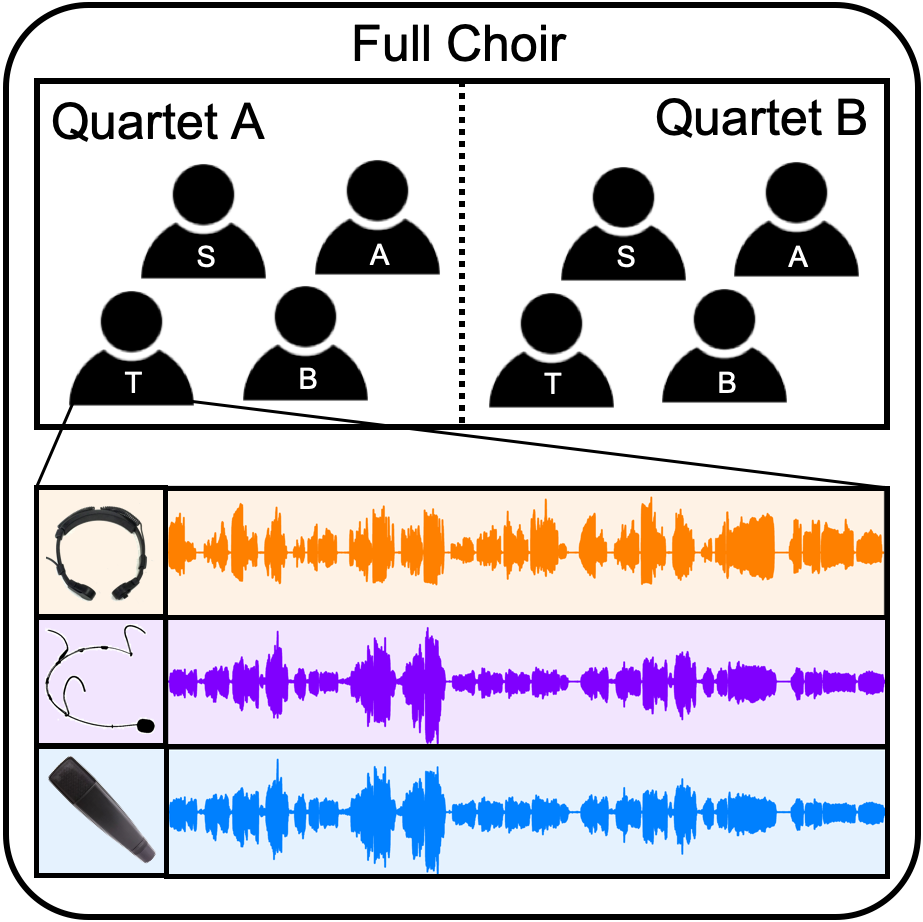

Dagstuhl ChoirSet: A Multitrack Dataset for MIR Research on Choral Singing

Link: Web-based Interface

Choral singing is a central part of musical cultures across the world, yet many facets of this widespread form of polyphonic singing are still to be explored. Music information retrieval (MIR) research on choral singing benefits from multitrack recordings of the individual singing voices. However, only a few publicly available multitrack datasets of polyphonic singing exist. In this paper, we present Dagstuhl ChoirSet (DCS), a multitrack dataset of a cappella choral music designed to support MIR research on choral singing. The dataset includes recordings of an amateur vocal ensemble performing two choral pieces in full choir and quartet settings. The audio data was recorded during an MIR seminar at Schloss Dagstuhl using different close-up microphones to capture the individual singers’ voices.

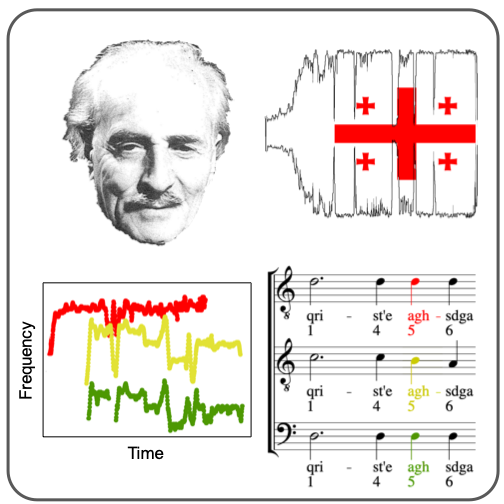

Erkomaishvili Dataset: A Curated Corpus of Traditional Georgian Vocal Music for Computational Musicology

Link: Demo

The analysis of recorded audio material using computational methods has received increased attention in ethnomusicological research. We present a curated dataset of traditional Georgian vocal music for computational musicology. The corpus is based on historic tape recordings of three-voice Georgian songs performed by the former master chanter Artem Erkomaishvili. In this article, we give a detailed overview of the audio material, transcriptions, and annotations contained in the dataset. Beyond its importance for ethnomusicological research, this carefully organized and annotated corpus constitutes a challenging scenario for music information retrieval tasks such as fundamental frequency estimation, onset detection, and score-to-audio alignment. The corpus is publicly available and accessible through score-following web players.

A new archive of multichannel-multimedia field recordings of traditional Georgian singing, praying, and lamenting with special emphasis on Svaneti

Link: Interface

This unique collection of 216 traditional Georgian chants was recorded by Frank Scherbaum and Nana Mzhavanadze during an exploratory field trip to Upper Svaneti, Georgia, in summer 2016. The recordings comprise various chants sung by different groups and singers in different villages. A special property of this collection is the variety of recording devices used: besides a camcorder for video recording and conventional stereo microphones capturing the overall impression, each singer was also recorded individually with a headset microphone and a larynx (throat) microphone.

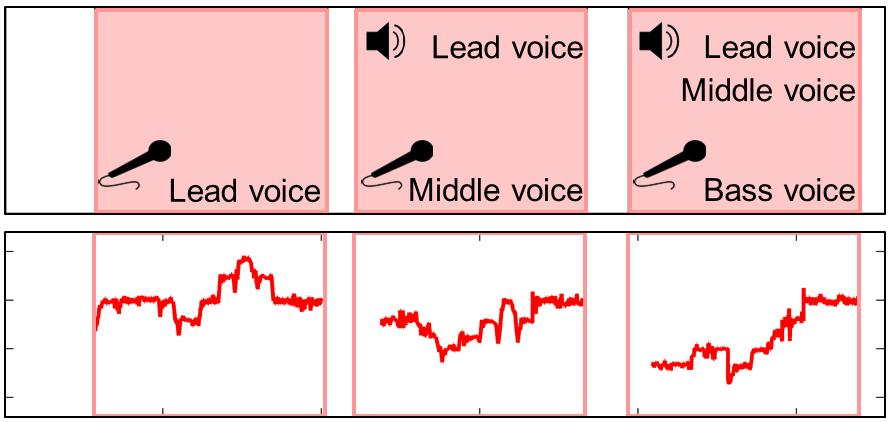

Throat Microphones for Vocal Music Analysis

Link: Demo

Due to the complex nature of the human voice, the computational analysis of polyphonic vocal music recordings constitutes a challenging scenario. Developing and evaluating automated music processing methods often relies on multitrack recordings comprising one or several tracks per voice. However, recording singers separately is neither always possible nor generally desirable. As a consequence, obtaining clean recordings of individual voices for computational analysis is problematic. In this context, one may use throat microphones, which capture the vibrations of a singer’s throat and are thus robust to other surrounding acoustic sources. In this contribution, we sketch the potential of such microphones for music information retrieval tasks such as melody extraction. Furthermore, we report on first experiments conducted in the course of a recent project on computational ethnomusicology, in which we use throat microphones to analyze traditional three-voice Georgian vocal music.
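Because a throat microphone mainly captures the wearer's own voice, one might expect even a basic single-voice F0 estimator to serve as a first melody-extraction baseline; this is an assumption, not a finding reported here. The following Python sketch applies plain normalized autocorrelation to one audio frame. It is not the method used in the project, and the parameters `fmin`, `fmax`, and `voicing_threshold` are illustrative.

```python
import math
from typing import Optional, Sequence


def estimate_f0_autocorrelation(frame: Sequence[float], sample_rate: int,
                                fmin: float = 60.0, fmax: float = 1000.0,
                                voicing_threshold: float = 0.3) -> Optional[float]:
    """Return an F0 estimate in Hz for one frame, or None if judged unvoiced."""
    n = len(frame)
    mean = sum(frame) / n
    x = [s - mean for s in frame]  # remove DC offset
    energy = sum(s * s for s in x)
    if energy == 0.0:
        return None  # silent frame
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), n - 1)
    best_lag, best_r = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Autocorrelation normalized by the frame energy (biased estimate,
        # which favors the fundamental period over its multiples).
        r = sum(x[i] * x[i + lag] for i in range(n - lag)) / energy
        if r > best_r:
            best_r, best_lag = r, lag
    if best_lag is None or best_r < voicing_threshold:
        return None
    return sample_rate / best_lag


# A 220 Hz sine standing in for a throat-microphone frame (1024 samples, 16 kHz).
sr = 16000
sig = [math.sin(2 * math.pi * 220.0 * i / sr) for i in range(1024)]
print(estimate_f0_autocorrelation(sig, sr))  # close to 220 Hz
```

The estimate is limited to integer lags, so at 16 kHz its resolution around 220 Hz is roughly 3 Hz; practical trackers such as YIN or pYIN refine this with interpolation and more careful voicing decisions.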

Fundamental Frequency Annotations for Georgian Chant Recordings

Link: Website for the Dataset and Annotations

The analysis of recorded audio sources has become increasingly important in ethnomusicological research. Such audio material may contain important cues on performance practice, information that is often lost in manually generated symbolic music transcriptions. In collaboration with Frank Scherbaum (Universität Potsdam, Germany), we considered a musically relevant audio collection that consists of more than 100 three-voice polyphonic Georgian chants. These songs were performed by Artem Erkomaishvili (1887–1967), one of the last representatives of the master chanters of Georgian music, in a three-stage recording process. This website provides the segment annotations as well as the F0 annotations for each of the songs in a simple CSV format. Furthermore, visualizations and sonifications of the F0 trajectories are provided.
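The exact column layout of the CSV files is not spelled out here, so the Python sketch below simply assumes one row per frame with a time stamp in seconds and an F0 value in Hz, using 0 for unvoiced frames. Under that assumption, it reads an annotation file and renders a basic sinusoidal sonification by phase accumulation, holding each F0 value until the next time stamp. The helper names are hypothetical, and the layout should be checked against the actual files.

```python
import csv
import io
import math
from typing import List, TextIO, Tuple


def read_f0_csv(stream: TextIO) -> List[Tuple[float, float]]:
    """Parse rows of (time in s, F0 in Hz); the column layout is an assumption."""
    annotations = []
    for row in csv.reader(stream):
        if not row:
            continue  # skip blank lines
        annotations.append((float(row[0]), float(row[1])))
    return annotations


def sonify_f0(annotations: List[Tuple[float, float]], sample_rate: int = 8000,
              amplitude: float = 0.5) -> List[float]:
    """Render a sine tone following the F0 trajectory up to the last time stamp.

    Each F0 value is held until the next time stamp (step interpolation), and
    frames with F0 <= 0 are rendered as silence. The phase is accumulated so
    that frequency changes do not cause discontinuities in the waveform.
    """
    if not annotations:
        return []
    n_samples = int(round(annotations[-1][0] * sample_rate))
    samples, phase, idx = [], 0.0, 0
    for n in range(n_samples):
        t = n / sample_rate
        while idx + 1 < len(annotations) and annotations[idx + 1][0] <= t:
            idx += 1
        f0 = annotations[idx][1]
        if f0 > 0.0:
            samples.append(amplitude * math.sin(phase))
            phase += 2.0 * math.pi * f0 / sample_rate
        else:
            samples.append(0.0)
    return samples


# Tiny in-memory example: 0.5 s at 220 Hz followed by an unvoiced segment.
example = io.StringIO("0.0,220.0\n0.5,0.0\n1.0,0.0\n")
audio = sonify_f0(read_f0_csv(example))
print(len(audio))  # 8000
```

For three-voice songs, one such signal per voice could be mixed to make the interplay of the F0 trajectories audible.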