Demos

Pulsetable Synthesis of Wind Instruments

Wind instruments enable highly expressive performances through playing techniques such as vibrato, slurs, and growl. In this demo, we introduce PULSE-IT, a lightweight synthesis method that combines simple signal processing components with data-driven control signals. Based on a pulsetable oscillator and time-variant filtering, PULSE-IT supports both re-synthesis and cross-synthesis (timbre transfer) of expressive wind instrument playing at low computational cost. Despite its simplicity, the method yields convincing results and provides a practical alternative to more complex neural approaches.

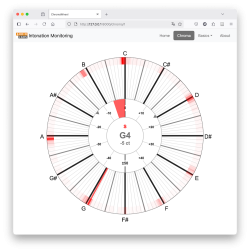

Real-Time Intonation Monitoring in Music Ensembles

In this work, we explore the possibilities of a real-time, multi-user intonation monitoring system specifically developed for music ensembles. It provides immediate, role-specific feedback to both musicians and conductors, supporting collaborative rehearsal practices and ensemble tuning processes. We conducted an initial experiment in a rehearsal setting with four musicians and a conductor, focusing on a qualitative evaluation of usability, interpretability, and musical relevance. The preliminary findings indicate that the system offers clear and practical feedback, promotes awareness of intonation, and has the potential to enhance interaction and facilitate communication within the ensemble.

JazzTube

Web services allow permanent access to music from all over the world. Especially in the case of web services with user-supplied content, e.g., YouTube, the available metadata is often incomplete or erroneous. On the other hand, a vast amount of high-quality and musically relevant metadata has been annotated in research areas such as Music Information Retrieval (MIR). Although they have great potential, these musical annotations are often inaccessible to users outside the academic world. With our contribution, we want to bridge this gap by enriching publicly available multimedia content with musical annotations available in research corpora, while maintaining easy access to the underlying data. JazzTube lets you explore the annotations from the Weimar Jazz Database (WJD) in intuitive and interactive ways. Enjoy an enriched listening experience by exploring famous jazz recordings along with solo transcriptions and further information about the featured soloists.

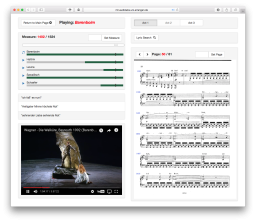

The Opera as a Multimedia Scenario

Music with its many representations can be seen as a multimedia scenario: besides the actual music recording, there exist a number of different media objects (e.g., video recordings, lyrics, or sheet music) that describe the music in different ways. In the course of digitization efforts, many of these media objects are nowadays publicly available on the Internet. However, the media objects are usually accessed individually without using their musical relationships. Using these relationships could open up new ways of navigating and interacting with the music. In this work, we model these relationships by taking the opera Die Walküre by Richard Wagner as a case study. As a first step, we describe the opera as a multimedia scenario and introduce the considered media objects. By using manual annotations, we establish mutual relationships between the media objects. These relationships are then modeled in a database schema. Finally, we present a web-based demonstrator that offers several ways of navigating within the opera recordings and allows for accessing the media objects in a user-friendly way.

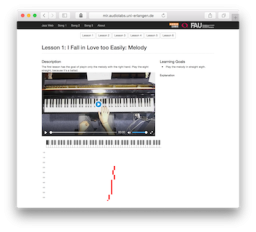

Web-based Jazz Piano Education Tool

To learn an instrument, many people acquire the necessary sensorimotor and musical skills by imitating their teachers. In the case of studying jazz improvisation, the student needs to learn fundamental harmonic principles. In this work, we indicate the potential of incorporating computer-assisted methods in jazz piano lessons. In particular, we present a web-based tool that offers easy interaction with the provided multimedia content. This tool enables the student to revise the lesson's content at an individual tempo with the help of recorded and annotated examples.

Deep Learning for Jazz Walking Bass Transcription

In this work, we focus on transcribing walking bass lines, which provide clues for revealing the chords actually played in jazz recordings. Our transcription method is based on a deep neural network (DNN) that learns a mapping from a mixture spectrogram to a salience representation that emphasizes the bass line. Furthermore, using beat positions, we apply a late-fusion approach to obtain beat-wise pitch estimates of the bass line. First, our results show that this DNN-based transcription approach outperforms state-of-the-art transcription methods for the given task. Second, we found that augmenting the training set using pitch shifting improves the model performance. Finally, we present a semi-supervised learning approach where additional training data is generated from predictions on unlabeled datasets.
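To make the beat-wise estimation step more concrete, the following minimal sketch turns a frame-wise salience matrix into one pitch per beat interval. The abstract does not say how frames are fused, so averaging the salience between consecutive beats and picking the strongest pitch is an assumption made here for illustration. The function name `beatwise_pitch` and all of its parameters are hypothetical.

```python
import numpy as np

def beatwise_pitch(salience, frame_times, beat_times, pitch_axis):
    """Return one pitch estimate per beat interval.

    salience:    array of shape (num_pitches, num_frames)
    frame_times: time stamp (in seconds) of each frame
    beat_times:  beat positions (in seconds), sorted ascending
    pitch_axis:  pitch label (e.g., MIDI number) for each salience row

    Assumption: frames are fused by averaging salience within a beat
    interval; the source does not specify the aggregation.
    """
    salience = np.asarray(salience, dtype=float)
    frame_times = np.asarray(frame_times, dtype=float)
    pitches = []
    for start, end in zip(beat_times[:-1], beat_times[1:]):
        # Select all frames that fall into the half-open interval [start, end).
        mask = (frame_times >= start) & (frame_times < end)
        if not mask.any():
            pitches.append(None)  # no frame available for this beat
            continue
        mean_salience = salience[:, mask].mean(axis=1)
        pitches.append(int(pitch_axis[int(np.argmax(mean_salience))]))
    return pitches
```

Calling `beatwise_pitch(salience, frame_times, beat_times, pitch_axis)` with N beat positions yields N-1 estimates, one for each interval between consecutive beats.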

Data-Driven Solo Voice Enhancement for Jazz Music Retrieval

Retrieving short monophonic queries in music recordings is a challenging research problem in Music Information Retrieval (MIR). In jazz music, given a solo transcription, one retrieval task is to find the corresponding (potentially polyphonic) recording in a music collection. Many conventional systems approach such retrieval tasks by first extracting the predominant F0-trajectory from the recording, then quantizing the extracted trajectory to musical pitches and finally comparing the resulting pitch sequence to the monophonic query. In this paper, we introduce a data-driven approach that avoids the hard decisions involved in conventional approaches: Given pairs of time-frequency (TF) representations of full music recordings and TF representations of solo transcriptions, we use a DNN-based approach to learn a mapping for transforming a "polyphonic" TF representation into a "monophonic" TF representation. This transform can be considered as a kind of solo voice enhancement. We evaluate our approach within a jazz solo retrieval scenario and compare it to a state-of-the-art method for predominant melody extraction.
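The "hard decisions" of the conventional pipeline can be illustrated with a minimal sketch of its quantization step, which rounds each F0 value to the nearest equal-tempered MIDI pitch and merges repeated frames into notes. This shows only the conventional baseline idea, not the DNN-based method. The function names, the 440 Hz reference, and the convention that non-positive F0 values mark unvoiced frames are assumptions.

```python
import numpy as np

def f0_to_midi(f0_hz, ref_hz=440.0):
    """Quantize F0 values (Hz) to the nearest MIDI pitch.

    Assumption: frames with F0 <= 0 are unvoiced and are mapped to -1.
    """
    f0 = np.asarray(f0_hz, dtype=float)
    midi = np.full(f0.shape, -1, dtype=int)
    voiced = f0 > 0
    # MIDI pitch 69 corresponds to the reference frequency (A4).
    midi[voiced] = np.round(69 + 12 * np.log2(f0[voiced] / ref_hz)).astype(int)
    return midi

def collapse_repeats(midi):
    """Drop unvoiced frames and merge consecutive identical pitches into notes."""
    notes = []
    prev = None
    for p in midi:
        if p < 0:
            prev = None  # an unvoiced gap ends the current note
            continue
        if p != prev:
            notes.append(int(p))
        prev = p
    return notes
```

Each rounding step discards the deviation from the pitch grid, so an F0 error of a few frames can change the resulting note sequence. This is the kind of irreversible decision the data-driven approach is designed to avoid.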

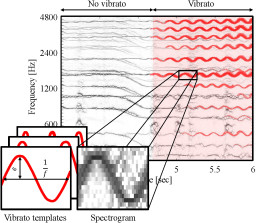

Template-Based Vibrato Analysis of Music Signals

The automated analysis of vibrato in complex music signals is a highly challenging task. A common strategy is to proceed in a two-step fashion. First, a fundamental frequency (F0) trajectory for the musical voice that is likely to exhibit vibrato is estimated. In a second step, the trajectory is then analyzed with respect to periodic frequency modulations. As a major drawback, however, such a method cannot recover from errors made in the inherently difficult first step, which severely limits the performance during the second step. In this work, we present a novel vibrato analysis approach that avoids the error-prone first step of F0 estimation. Our core idea is to perform the analysis directly on a signal's spectrogram representation, where vibrato is evident in the form of characteristic spectro-temporal patterns. We detect and parameterize these patterns by locally comparing the spectrogram with a predefined set of vibrato templates. Our systematic experiments indicate that this approach is more robust than F0-based strategies.
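The idea of comparing a spectrogram patch with vibrato templates can be sketched as follows. The abstract does not specify the template shape or the similarity measure, so the binary sinusoidal ridge and the normalized correlation used here are assumptions, as are all function and parameter names. For simplicity, the sketch also ignores the vibrato phase, which a practical system would have to handle.

```python
import numpy as np

def vibrato_template(rate_hz, extent_bins, num_bins, num_frames, frame_rate):
    """Binary time-frequency patch containing a sinusoidal ridge.

    Assumption: this template shape is hypothetical and only illustrates
    the idea; extent_bins must be small enough for the ridge to stay
    inside the patch.
    """
    t = np.arange(num_frames) / frame_rate
    center = (num_bins - 1) / 2
    ridge = np.round(center + extent_bins * np.sin(2 * np.pi * rate_hz * t)).astype(int)
    template = np.zeros((num_bins, num_frames))
    template[ridge, np.arange(num_frames)] = 1.0
    return template

def best_template(patch, templates):
    """Return the key of the best-matching template and all scores.

    The score is the normalized correlation between patch and template.
    """
    scores = {}
    for key, tpl in templates.items():
        denom = np.linalg.norm(patch) * np.linalg.norm(tpl)
        scores[key] = float((patch * tpl).sum() / denom) if denom > 0 else 0.0
    return max(scores, key=scores.get), scores
```

In this setup, each template is indexed by its vibrato rate, so the key of the best-scoring template serves directly as a rate estimate for the patch. Sliding the comparison across the spectrogram would give local estimates without computing an F0 trajectory first.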