Notewise Evaluation for Music Source Separation: A Case Study for Separated Piano Tracks

This is the accompanying website for the submission "Notewise Evaluation for Music Source Separation: A Case Study for Separated Piano Tracks".

- Yigitcan Özer, Hans-Ulrich Berendes, Vlora Arifi-Müller, Fabian-Robert Stöter, and Meinard Müller

Notewise Evaluation for Music Source Separation: A Case Study for Separated Piano Tracks

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR) (to appear), San Francisco, USA, 2024.

Abstract

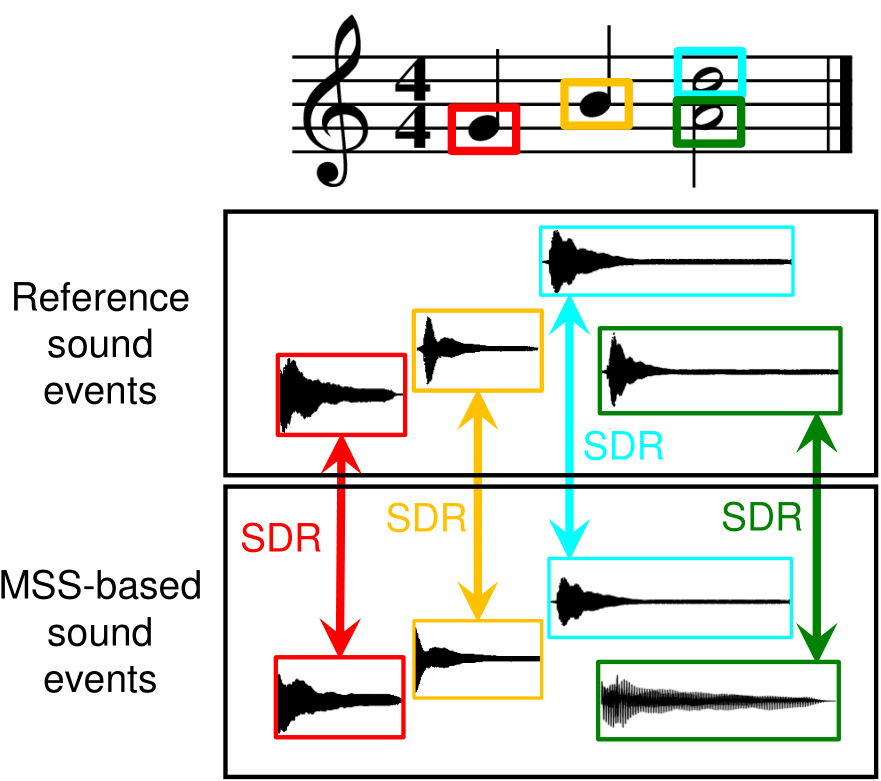

Deep learning has significantly advanced music source separation (MSS), aiming to decompose music recordings into individual tracks corresponding to singing or specific instruments. Typically, results are evaluated using quantitative measures like signal-to-distortion ratio (SDR) computed for entire excerpts or songs. As the main contribution of this article, we introduce a novel evaluation approach that decomposes an audio track into musically meaningful sound events and applies the evaluation metric based on these units. In a case study, we apply this strategy to the challenging task of separating piano concerto recordings into piano and orchestra tracks. To assess piano separation quality, we use a score-informed nonnegative matrix factorization approach to decompose the reference and separated piano tracks into notewise sound events. In our experiments assessing various MSS systems, we demonstrate that our notewise evaluation, which takes into account factors such as pitch range and musical complexity, enhances the comprehension of both the results of source separation and the intricacies within the underlying music.
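To illustrate the idea of applying a metric per sound event rather than per excerpt, the following minimal sketch computes a plain energy-ratio SDR for each note separately. It is not the evaluation code from the paper: the function names `sdr_db` and `notewise_sdr` are hypothetical, the SDR variant is the simple ratio 10·log10(‖s‖² / ‖s − ŝ‖²) rather than the projection-based BSS Eval definition, and the notewise events are assumed to be given already (in the paper they are obtained with score-informed NMF).

```python
import numpy as np

def sdr_db(reference, estimate, eps=1e-10):
    """Plain energy-ratio SDR in dB: 10*log10(||s||^2 / ||s - s_hat||^2)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    signal_energy = np.sum(reference ** 2)
    error_energy = np.sum((reference - estimate) ** 2)
    # eps avoids division by zero and log(0) for silent or perfect events
    return 10.0 * np.log10((signal_energy + eps) / (error_energy + eps))

def notewise_sdr(ref_events, est_events):
    """SDR per note event.

    ref_events[i] and est_events[i] are assumed to be equally long 1-D
    arrays that belong to the same score note (hypothetical input format).
    """
    return [sdr_db(r, e) for r, e in zip(ref_events, est_events)]

# Toy data: two synthetic "notes" that are attenuated differently,
# standing in for a well-separated and a poorly separated note.
sr = 22050
t = np.arange(sr) / sr
note_a = np.sin(2 * np.pi * 440.0 * t)
note_b = np.sin(2 * np.pi * 110.0 * t)

ref_events = [note_a, note_b]
est_events = [0.9 * note_a, 0.5 * note_b]

scores = notewise_sdr(ref_events, est_events)
for i, s in enumerate(scores):
    print(f"note {i}: {s:.2f} dB")
```

Once each note has its own score, the values can be grouped by score attributes such as pitch range, which a single excerpt-level number cannot reveal.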

Test Dataset

For the quantitative and subjective evaluation in our experiments, we use the dry recordings (without artificial reverberation) from the Piano Concerto Dataset (PCD) as our test dataset. Additionally, we generated piano scores for all excerpts and then used music synchronization techniques to align these scores with the recorded excerpts. As an additional contribution, we release these annotations, thereby adding a score-based layer to the PCD collection.
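As a rough illustration of how an alignment can be used to carry score annotations over to a recording, the sketch below maps note onsets from score time to audio time by linear interpolation along a warping path. This is only an assumption about the general principle, not the pipeline used for the released annotations: the function `transfer_times` and the toy warping path are hypothetical, and the path is assumed to be strictly increasing in score time.

```python
import numpy as np

def transfer_times(score_times, warping_path):
    """Map times on the score axis to the audio axis.

    warping_path is a sequence of (score_time, audio_time) pairs in seconds,
    assumed to be strictly increasing in score_time (hypothetical format).
    """
    wp = np.asarray(warping_path, dtype=float)
    # Piecewise-linear interpolation between the anchor points of the path
    return np.interp(score_times, wp[:, 0], wp[:, 1])

# Toy warping path: the performance is slightly slower than the score.
warping_path = [(0.0, 0.0), (1.0, 1.2), (2.0, 2.1), (4.0, 4.5)]

score_onsets = [0.5, 1.0, 3.0]
audio_onsets = transfer_times(score_onsets, warping_path)
print(audio_onsets)
```

Note offsets can be transferred the same way, which yields for every score note a time interval in the recording.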

Source Code

For the reproducibility of the results, we provide the open-source code in our GitHub repository.

Results

For an overview of the right-hand (RH) and left-hand (LH) decomposition of the separated piano tracks, see a subset of the decomposed piano recordings here.

For an overview of the decomposition of all 81 test samples from PCD, click here. Please note that the page may take a while to load due to the large number of audio examples.

References

- Yigitcan Özer and Meinard Müller

Source Separation of Piano Concertos Using Musically Motivated Augmentation Techniques

IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), 32: 1214–1225, 2024. DOI: 10.1109/TASLP.2024.3356980. Demo: https://www.audiolabs-erlangen.de/resources/MIR/2024-TASLP-PianoConcertoSeparation

- Yigitcan Özer, Simon Schwär, Vlora Arifi-Müller, Jeremy Lawrence, Emre Sen, and Meinard Müller

Piano Concerto Dataset (PCD): A Multitrack Dataset of Piano Concertos

Transactions of the International Society for Music Information Retrieval (TISMIR), 6(1): 75–88, 2023. DOI: 10.5334/tismir.160. Demo: https://www.audiolabs-erlangen.de/resources/MIR/PCD

- Yigitcan Özer and Meinard Müller

Source Separation of Piano Concertos with Test-Time Adaptation

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 493–500, Bengaluru, India, 2022. Demo: https://www.audiolabs-erlangen.de/resources/MIR/2022-PianoSep

- Emmanuel Vincent, Rémi Gribonval, and Cédric Févotte

Performance measurement in blind audio source separation

IEEE Transactions on Audio, Speech, and Language Processing, 14(4): 1462–1469, 2006.

- Fabian-Robert Stöter, Stefan Uhlich, Antoine Liutkus, and Yuki Mitsufuji

Open-Unmix - A Reference Implementation for Music Source Separation

Journal of Open Source Software, 4(41), 2019. DOI: 10.21105/joss.01667

- Romain Hennequin, Anis Khlif, Felix Voituret, and Manuel Moussallam

Spleeter: A Fast and Efficient Music Source Separation Tool with Pre-trained Models

Journal of Open Source Software, 5(50): 2154, 2020. DOI: 10.21105/joss.02154

- Alexandre Défossez

Hybrid Spectrogram and Waveform Source Separation

In Proceedings of the ISMIR 2021 Workshop on Music Source Separation, Online, 2021.

- Alexandre Défossez, Nicolas Usunier, Léon Bottou, and Francis R. Bach

Music Source Separation in the Waveform Domain

arXiv:1911.13254, 2019. URL: http://arxiv.org/abs/1911.13254

- Daniel D. Lee and H. Sebastian Seung

Algorithms for Non-negative Matrix Factorization

In Proceedings of the Neural Information Processing Systems (NIPS): 556–562, Denver, Colorado, USA, 2000.

- Sebastian Ewert and Meinard Müller

Using Score-Informed Constraints for NMF-based Source Separation

In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP): 129–132, Kyoto, Japan, 2012. DOI: 10.1109/ICASSP.2012.6287834. Details: http://resources.mpi-inf.mpg.de/MIR/ICASSP2012-ScoreInformedNMF/

- Meinard Müller, Yigitcan Özer, Michael Krause, Thomas Prätzlich, and Jonathan Driedger

Sync Toolbox: A Python Package for Efficient, Robust, and Accurate Music Synchronization

Journal of Open Source Software (JOSS), 6(64): 3434:1–4, 2021. DOI: 10.21105/joss.03434

- Sebastian Ewert, Meinard Müller, and Peter Grosche

High Resolution Audio Synchronization Using Chroma Onset Features

In Proceedings of IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP): 1869–1872, Taipei, Taiwan, 2009. DOI: 10.1109/ICASSP.2009.4959972. Details: https://www.audiolabs-erlangen.de/resources/MIR/SyncRWC60

Acknowledgments

This work was supported by the German Research Foundation (DFG MU 2686/10-2). The authors are with the International Audio Laboratories Erlangen, a joint institution of the Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) and Fraunhofer Institute for Integrated Circuits IIS.