A Survey on Automatic Drum Transcription

This is the accompanying website for the following review article:

- Chih-Wei Wu, Christian Dittmar, Carl Southall, Richard Vogl, Gerhard Widmer, Jason Hockman, Meinard Müller, and Alexander Lerch

A Review of Automatic Drum Transcription

IEEE/ACM Transactions on Audio, Speech, and Language Processing, 26(9): 1457–1483, September 2018.

DOI: 10.1109/TASLP.2018.2830113

PDF: https://ieeexplore.ieee.org/document/8350302/

Demo: https://www.audiolabs-erlangen.de/resources/MIR/2017-DrumTranscription-Survey

Abstract

In Western popular music, drums and percussion are an important means to emphasize and shape the rhythm, often defining the musical style. If computers were able to analyze the drum part in recorded music, it would enable a variety of rhythm-related music processing tasks. In particular, the detection and classification of drum sound events by computational methods is considered an important and challenging research problem in the broader field of Music Information Retrieval (MIR). Over the last two decades, several authors have attempted to tackle this problem under the umbrella term Automatic Drum Transcription (ADT) [1,2,3]. This paper presents a comprehensive overview of ADT research, including a thorough discussion of the task-specific challenges, a categorization of existing techniques, and an evaluation of several state-of-the-art systems. To provide more insight into the practice of ADT systems, we focus on two families of ADT techniques, namely methods based on Non-negative Matrix Factorization (NMF) [4,5,6,7] and Recurrent Neural Networks (RNNs) [8,9,10]. We explain the methods’ technical details and drum-specific variations and evaluate these approaches on publicly available datasets with a consistent experimental setup. Finally, open issues and under-explored areas in ADT research are identified and discussed, providing future directions for this field.
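To make the NMF family mentioned in the abstract more concrete, the following is a minimal sketch of its basic idea, not the implementation of any of the cited methods [4,5,6,7]. It assumes that a magnitude spectrogram V is approximated as V ≈ W H, where the columns of W are fixed spectral templates (one per drum instrument) and the rows of H are the activations to be estimated with multiplicative updates for the Euclidean cost. The function names and the toy data are hypothetical and chosen for illustration only.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def estimate_activations(v, w, n_iter=200, eps=1e-9):
    """Estimate non-negative H with V ~ W H while keeping the templates W fixed."""
    wt = [list(col) for col in zip(*w)]   # W^T
    wtv = matmul(wt, v)                   # numerator W^T V (constant)
    wtw = matmul(wt, w)                   # W^T W (constant)
    h = [[1.0] * len(v[0]) for _ in range(len(wt))]  # positive initialization
    for _ in range(n_iter):
        wtwh = matmul(wtw, h)             # denominator W^T W H
        # Multiplicative update: H <- H * (W^T V) / (W^T W H)
        h = [[h_kt * num / (den + eps)
              for h_kt, num, den in zip(h_row, n_row, d_row)]
             for h_row, n_row, d_row in zip(h, wtv, wtwh)]
    return h


# Toy data (assumption): 4 frequency bins, 2 templates (columns: kick, hi-hat).
W = [[1.0, 0.0], [0.5, 0.1], [0.1, 0.5], [0.0, 1.0]]
H_TRUE = [[1.0, 0.0, 0.0, 1.0, 0.0],   # kick activations over 5 frames
          [0.0, 1.0, 0.0, 1.0, 1.0]]   # hi-hat activations over 5 frames
V = matmul(W, H_TRUE)                   # synthetic magnitude spectrogram
H = estimate_activations(V, W)          # should approach H_TRUE
```

Because the templates stay fixed, each row of H can be read directly as the activation curve of one instrument. The cited methods go further, for example by adapting templates or by adding components for sounds other than the target drums.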

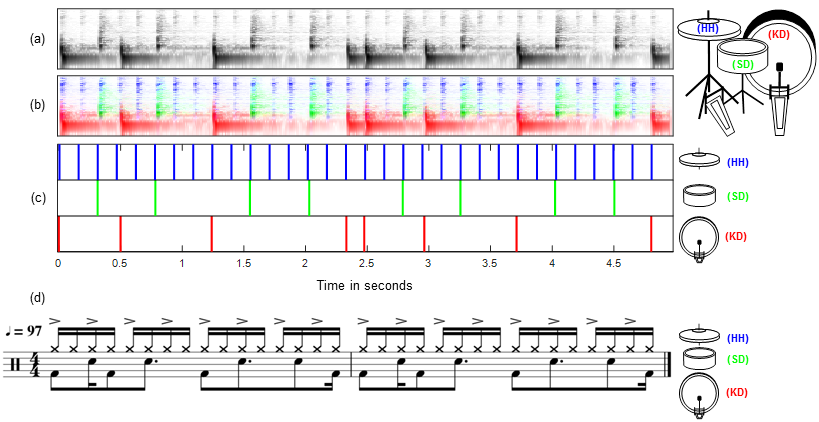

Illustration of Automatic Drum Transcription

(a) Example drum recording in a log-frequency spectrogram representation. Darker shades of gray encode higher energy.

(b) The same spectrogram representation with color-coded contributions of individual drum instruments.

(c) Target onsets displayed as discrete activation impulses.

(d) Drum notation of the example signal. The note symbols have been roughly aligned to the time axis of the figures above.
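The step from a continuous activation curve to the discrete onset impulses in panel (c) is commonly realized by peak picking. The sketch below is one simple way to do this under stated assumptions: a single fixed threshold, a known hop size between activation frames, and the hypothetical function name `pick_onsets`. The systems evaluated in the article may use more elaborate (for example, adaptive) thresholds.

```python
def pick_onsets(activation, hop_seconds, threshold=0.5):
    """Return onset times in seconds at local maxima that reach `threshold`."""
    onsets = []
    for i, value in enumerate(activation):
        left = activation[i - 1] if i > 0 else float("-inf")
        right = activation[i + 1] if i + 1 < len(activation) else float("-inf")
        # Strictly greater than the left neighbor so a plateau yields one onset.
        if value >= threshold and value > left and value >= right:
            onsets.append(i * hop_seconds)
    return onsets


# Toy activation curve for one instrument (assumption: 10 ms hop size).
activation = [0.0, 0.1, 0.9, 0.2, 0.0, 0.6, 0.7, 0.3]
onset_times = pick_onsets(activation, hop_seconds=0.01)
```

In this toy curve the local maxima above the threshold are at frames 2 and 6, so two onsets are reported.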

Selected Audio Examples

| ADT of Drum-only recordings: | [D-DTD] |
| ADT in the presence of Percussion: | [D-DTP] |
| ADT in the presence of Melodic instruments: | [D-DTM] |

Evaluation Results

The following tables provide links to the complete evaluation results of our comparison of state-of-the-art methods in CSV format. Alternatively, you can download an Excel file that includes all tables here: [Complete Results]. The abbreviations used below are F (F-Measure), P (Precision), and R (Recall).
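As a reminder of how these three measures relate, the following sketch computes P, R, and F for one instrument from lists of reference and detected onset times. It is an illustration only, not the evaluation code used for the tables: the greedy one-to-one matching, the tolerance value of 50 ms, and the function name `evaluate_onsets` are assumptions made here.

```python
def evaluate_onsets(reference, detected, tolerance=0.05):
    """Return (precision, recall, f_measure) for onset times given in seconds."""
    reference, detected = sorted(reference), sorted(detected)
    i = j = true_positives = 0
    while i < len(reference) and j < len(detected):
        diff = detected[j] - reference[i]
        if abs(diff) <= tolerance:
            true_positives += 1   # matched pair, each onset is used only once
            i += 1
            j += 1
        elif diff < 0:
            j += 1                # detection without a reference: false positive
        else:
            i += 1                # reference without a detection: false negative
    precision = true_positives / len(detected) if detected else 0.0
    recall = true_positives / len(reference) if reference else 0.0
    total = precision + recall
    f_measure = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_measure


# Toy example: 2 of 3 detections match 2 of 4 reference onsets.
P, R, F = evaluate_onsets([0.5, 1.0, 1.5, 2.0], [0.51, 1.02, 1.7])
```

Here P = 2/3 and R = 1/2, so F, the harmonic mean of the two, equals 4/7.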

| Test Dataset | Avg. F/P/R across instr. | Avg. F per instr. | Note |
| --- | --- | --- | --- |
| D-DTD | [Trained on D-DTD] | [Trained on D-DTD] | The bar plots resulting from these numbers are shown in Fig. 11a, Fig. 12a, Fig. 13a |
| D-DTP | [Trained on D-DTP] | [Trained on D-DTP] | |
| D-DTM | [Trained on D-DTM] | [Trained on D-DTM] | |

| Test Dataset | Avg. F/P/R across instr. | Avg. F per subset | Avg. F per instr. | Note |
| --- | --- | --- | --- | --- |
| D-DTD | [Trained on D-DTD] | [Trained on D-DTD] | [Trained on D-DTD] | The bar plots resulting from these numbers are shown in Fig. 11b, Fig. 12b, Fig. 13b |
| D-DTP | [Trained on D-DTP] | [Trained on D-DTP] | [Trained on D-DTP] | |
| D-DTM | [Trained on D-DTM] | [Trained on D-DTM] | [Trained on D-DTM] | |

| Test Dataset | Avg. F/P/R across instr. | Avg. F per instr. | Avg. F/P/R across instr. | Avg. F per instr. | Note |
| --- | --- | --- | --- | --- | --- |
| D-DTD | [Trained on D-DTP] | [Trained on D-DTP] | [Trained on D-DTM] | [Trained on D-DTM] | The bar plots resulting from these numbers are shown in Fig. 14, Fig. 15, Fig. 16 |
| D-DTP | [Trained on D-DTD] | [Trained on D-DTD] | [Trained on D-DTM] | [Trained on D-DTM] | |
| D-DTM | [Trained on D-DTD] | [Trained on D-DTD] | [Trained on D-DTP] | [Trained on D-DTP] | |

Reference Source Code

These links lead to the source code of the algorithms compared in our experimental evaluation.

| NMF-Based: | [https://github.com/cwu307/NmfDrumToolbox] |
| RNN-Based: | [https://github.com/CarlSouthall/ADTLib] |

Evaluation Corpora

These links lead to the publicly available corpora we used for our experimental evaluation.

| IDMT-SMT-Drums: | [http://www.idmt.fraunhofer.de/en/business_units/smt/drums.html] |
| ENST-Drums: | [http://perso.telecom-paristech.fr/~grichard/ENST-drums/] |

References

- [1] Derry FitzGerald

Automatic drum transcription and source separation

PhD Thesis, Dublin Institute of Technology, 2004.

- [2] Derry FitzGerald and Jouni Paulus

Unpitched percussion transcription

In Anssi Klapuri and Manuel Davy (eds.): Signal Processing Methods for Music Transcription, Springer: 131–162, 2006.

- [3] Jouni Paulus

Signal Processing Methods for Drum Transcription and Music Structure Analysis

PhD Thesis, Tampere University of Technology, 2009.

- [4] Henry Lindsay-Smith, Skot McDonald, and Mark Sandler

Drumkit Transcription via Convolutive NMF

In Proceedings of the International Conference on Digital Audio Effects (DAFx), 2012.

- [5] Christian Dittmar and Daniel Gärtner

Real-Time Transcription and Separation of Drum Recordings based on NMF Decomposition

In Proceedings of the International Conference on Digital Audio Effects (DAFx): 187–194, 2014.

- [6] Chih-Wei Wu and Alexander Lerch

Drum transcription using partially fixed non-negative matrix factorization

In Proceedings of the European Signal Processing Conference (EUSIPCO), 2015.

- [7] Chih-Wei Wu and Alexander Lerch

Drum Transcription Using Partially Fixed Non-Negative Matrix Factorization with Template Adaptation

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 257–263, 2015.

- [8] Richard Vogl, Matthias Dorfer, and Peter Knees

Recurrent Neural Networks for Drum Transcription

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 730–736, 2016.

- [9] Carl Southall, Ryan Stables, and Jason Hockman

Automatic Drum Transcription Using Bi-Directional Recurrent Neural Networks

In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR): 591–597, 2016.

- [10] Richard Vogl, Matthias Dorfer, and Peter Knees

Drum Transcription from Polyphonic Music with Recurrent Neural Networks

In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP): 201–205, 2017.