Finding Drum Breaks in Digital Music Recordings

This is the accompanying website for the following paper:

- Patricio López-Serrano, Christian Dittmar, and Meinard Müller

Finding Drum Breaks in Digital Music Recordings

In Proceedings of the International Symposium on Computer Music Modeling and Retrieval (CMMR), 2017.

@inproceedings{LopezDM17_DrumBreaks_CMMR,
  author    = {Patricio L\'{o}pez-Serrano and Christian Dittmar and Meinard M{\"u}ller},
  title     = {Finding Drum Breaks in Digital Music Recordings},
  booktitle = {Proceedings of the International Symposium on Computer Music Modeling and Retrieval ({CMMR})},
  address   = {Porto, Portugal},
  year      = {2017}
}

Abstract

DJs and producers of sample-based electronic dance music (EDM) use breakbeats as an essential building block and rhythmic foundation for their artistic work. The practice of reusing and resequencing sampled drum breaks critically influenced modern musical genres such as hip hop, drum'n'bass, and jungle. While EDM artists have primarily sourced drum breaks from funk, soul, and jazz recordings from the 1960s to 1980s, they can potentially be sampled from music of any genre. In this paper, we introduce and formalize the task of automatically finding suitable drum breaks in music recordings. By adapting an approach previously used for singing voice detection, we establish a first baseline for drum break detection. Besides a quantitative evaluation, we discuss benefits and limitations of our procedure by considering a number of challenging examples.

Dataset

Download a list of the tracks in our dataset [CSV file]. You can also explore the dataset and download segment annotations from the [dataset page].

James Brown - Funky Drummer [YouTube Link]

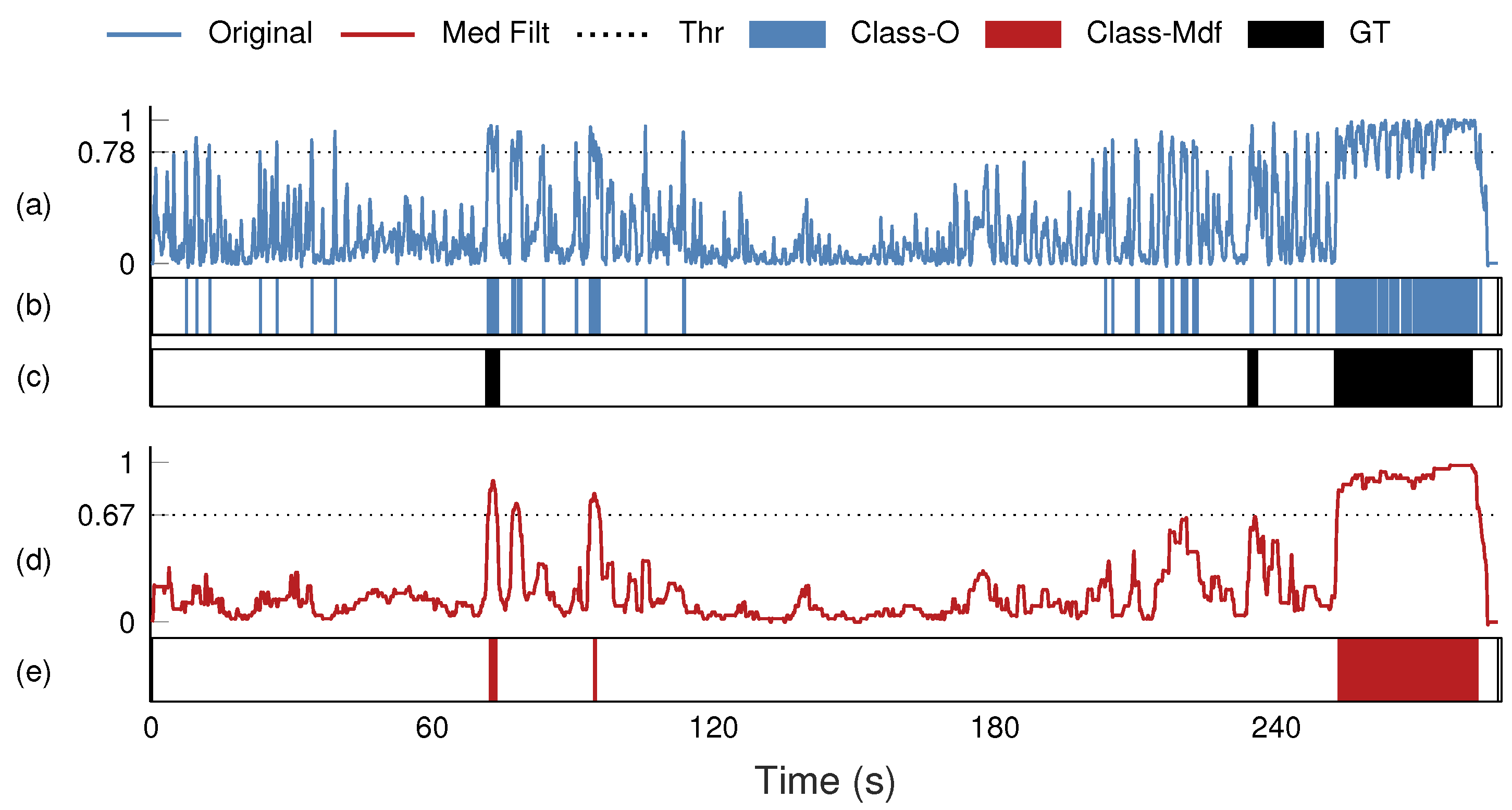

(a): Original, unprocessed decision function (solid blue curve); optimal threshold value (0.78, dotted black line). (b): Binary classification for original decision function (blue rectangles). (c): Ground truth annotation (black rectangles). (d): Decision function after median filtering with a filter length of 2.2 s (solid red curve); optimal threshold (0.67, dotted black line). (e): Binary classification for median-filtered decision function (red rectangles).
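The post-processing shown in panels (a) to (e) can be illustrated with a minimal sketch. It is not the code used for the paper. The frame rate of 10 Hz, the truncated window at the signal edges, the `>=` comparison against the threshold, and all function names are assumptions made for illustration. Only the filter length (2.2 s) and the two threshold values (0.78 and 0.67) are taken from the caption above.

```python
from statistics import median


def odd_window_length(seconds, frame_rate_hz):
    """Convert a filter length in seconds to an odd number of frames."""
    n = max(1, round(seconds * frame_rate_hz))
    return n if n % 2 == 1 else n + 1


def median_filter(values, length):
    """Sliding median over an odd window; edges use a truncated window (assumption)."""
    half = length // 2
    return [
        median(values[max(0, i - half): i + half + 1])
        for i in range(len(values))
    ]


def binarize(values, threshold):
    """Label a frame as percussion-only when its score reaches the threshold."""
    return [v >= threshold for v in values]


# Hypothetical decision function with one isolated dip inside a break.
FRAME_RATE_HZ = 10.0  # assumed, not stated on this page
decision = [0.9, 0.9, 0.1, 0.9, 0.9]

raw_labels = binarize(decision, 0.78)          # panel (b): unfiltered
smoothed = median_filter(decision, 3)          # short window for this toy input
smoothed_labels = binarize(smoothed, 0.67)     # panel (e): after filtering

# Window length corresponding to the 2.2 s filter at the assumed frame rate.
window = odd_window_length(2.2, FRAME_RATE_HZ)
```

In this toy input the isolated low frame splits the unfiltered labeling in two, while the median-filtered curve stays above its threshold throughout, which is the kind of smoothing effect visible when comparing panels (b) and (e).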

Dennis Coffey - Ride, Sally, Ride [YouTube Link]

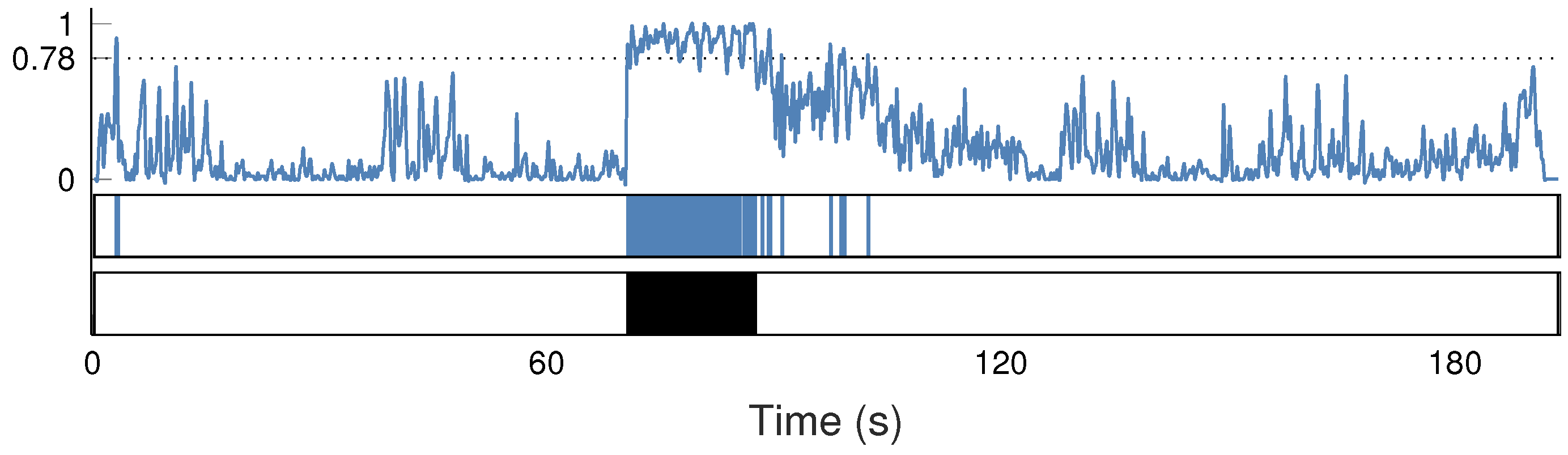

From top to bottom, the figure shows the decision function output by the random forest (RF) classifier (solid blue curve), the threshold value optimized during validation and testing (black dotted line), the frames estimated as percussion-only (blue rectangles), and the ground truth annotation (black rectangle). Focusing on the annotated segment (shortly after 60 s), we can see that the decision curve oscillates around the threshold, producing an inconsistent labeling. We attribute this behavior to eighth-note conga hits played during the first beat of each bar in the break. These percussion sounds are pitched and occur relatively rarely in the dataset, leading to low decision scores.
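One way to make the "inconsistent labeling" concrete is to group the frame-wise labels into contiguous segments and count how many fragments fall inside the annotated break. The following is a minimal sketch under stated assumptions: the function name, the frame rate, and the example labels are hypothetical and are not taken from the paper or its dataset.

```python
def frames_to_segments(labels, frame_rate_hz):
    """Group consecutive positive frames into (start_s, end_s) segments."""
    segments = []
    start = None
    for i, active in enumerate(labels):
        if active and start is None:
            start = i  # a new segment begins
        elif not active and start is not None:
            segments.append((start / frame_rate_hz, i / frame_rate_hz))
            start = None
    if start is not None:
        # Close a segment that runs to the end of the recording.
        segments.append((start / frame_rate_hz, len(labels) / frame_rate_hz))
    return segments


# Hypothetical labels for a decision curve that oscillates around the threshold.
labels = [True, False, True, True, False, True]
segments = frames_to_segments(labels, frame_rate_hz=1.0)
fragment_count = len(segments)
```

A single annotated break that yields several short segments, as in this toy example, corresponds to the fragmented blue rectangles in the figure.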

The New Mastersounds - Dusty Groove [YouTube Link]

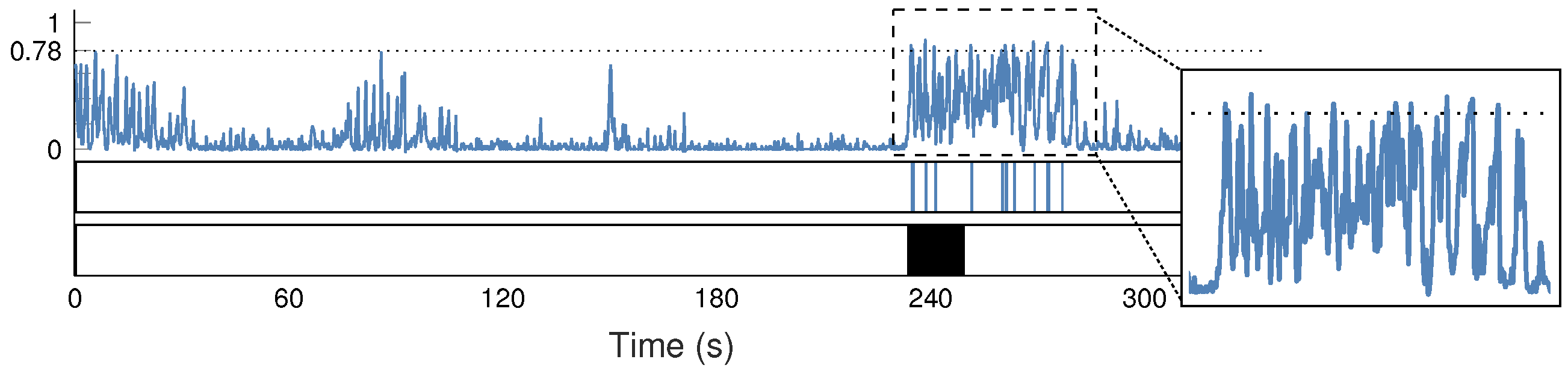

On this modern release from 2009, the production strives to replicate the "vintage" sound found on older recordings. Around the annotated region (240 s) we highlight two issues: during the break, few frames are classified as percussion-only, and the decision curve maintains a relatively high mean value well after the break. Again, we ascribe the misdetection during the break to the presence of drum hits with strong tonal content. The snare in particular has a distinct sound that is overtone-rich and has a longer decay than usual, almost reminiscent of timbales. After the annotated break the percussion continues, but a bass guitar playing mostly sixteenth notes and syncopated, staccato eighth notes appears. Upon closer inspection, we observed that the onsets of the bass guitar synchronize quite well with those of the bass drum. When the two are played simultaneously, the spectral content of the bass drum and the bass guitar overlaps considerably, creating a hybrid sound that is closer to percussion than to a pitched instrument.

Legal notice

The multimedia content linked on this page is provided for educational purposes only. If any legal problems occur, please contact us. Any content that allegedly infringes the copyright of a third party will be removed upon request by the copyright holder.