Towards Evaluating Multiple Predominant Melody Annotations in Jazz Recordings

Abstract

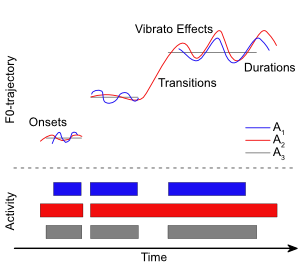

Melody estimation algorithms are typically evaluated by separately assessing two subtasks: voice activity detection and fundamental frequency estimation. For both subtasks, computed results are usually compared to a single human reference annotation. This is problematic because human experts may differ in how they specify a predominant melody, which leads to a pool of equally valid reference annotations. In this paper, we address the problem of evaluating melody extraction algorithms within a jazz music scenario. Using four human and two automatically computed annotations, we discuss the limitations of standard evaluation measures and introduce an adaptation of Fleiss’ kappa that can better account for multiple reference annotations. Our experiments not only highlight the behavior of the different evaluation measures, but also give deeper insights into the melody extraction task.

Data Download

You can download the annotation data as a Zip-compressed file here.

Data Visualization

For the visualizations, we resampled the data to a sampling period of 100 ms and show only the annotations made by the human annotators.
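The resampling step can be illustrated with a minimal sketch. It is not the code used to produce the visualizations. It assumes that an annotation is given as two equally long arrays of time stamps (in seconds, sorted, at least two entries) and fundamental frequency values (in Hz), with 0 marking unvoiced frames. This layout and the function name `resample_annotation` are assumptions made for illustration and may not match the files in the download. Nearest-neighbor lookup is used instead of linear interpolation so that no artificial frequency values appear at transitions between voiced and unvoiced frames.

```python
import numpy as np


def resample_annotation(times, f0, period=0.1):
    """Resample a frame-wise F0 annotation onto a uniform time grid.

    Hypothetical helper: `times` are sorted time stamps in seconds,
    `f0` are frequencies in Hz (0 = unvoiced, by assumption),
    `period` is the target sampling period in seconds (0.1 s = 100 ms).
    """
    times = np.asarray(times, dtype=float)
    f0 = np.asarray(f0, dtype=float)

    # Uniform grid from the first to the last annotated time stamp.
    # The small epsilon guards against floating-point round-off.
    n_frames = int(np.floor((times[-1] - times[0]) / period + 1e-9)) + 1
    grid = times[0] + period * np.arange(n_frames)

    # For each grid point, pick the closest original frame.
    right = np.clip(np.searchsorted(times, grid), 1, len(times) - 1)
    left = right - 1
    nearest = np.where(grid - times[left] <= times[right] - grid, left, right)
    return grid, f0[nearest]


# Toy annotation with a 50 ms hop (made-up values, not taken from the dataset).
toy_times = np.array([0.0, 0.05, 0.1, 0.15, 0.2, 0.25, 0.3])
toy_f0 = np.array([0.0, 0.0, 220.0, 220.0, 0.0, 440.0, 440.0])

grid, f0_resampled = resample_annotation(toy_times, toy_f0, period=0.1)
print(grid)          # time stamps spaced 100 ms apart
print(f0_resampled)  # F0 values taken from the nearest original frames
```

Because each output value is copied from an existing frame, the voicing decision of the original annotation is preserved at every grid point, at the cost of a timing error of up to half the original hop size.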