Neural Directional Filtering: Far-Field Directivity Control with a Small Microphone Array

J. Wechsler, S. R. Chetupalli, M. M. Halimeh, O. Thiergart and E. A. P. Habets

Published in Proc. Intl. Workshop Acoust. Signal Enhancement (IWAENC), 2024.

Best Student Paper Award - 2nd Place


Contents of this Page

- Abstract of the IWAENC 2024 paper

- Note to iOS users

- Audio Examples

- References

Abstract

Capturing audio signals with specific directivity patterns is essential in speech communication. This study presents a deep neural network (DNN)-based approach to directional filtering, alleviating the need for explicit signal models. More specifically, our proposed method uses a DNN to estimate a single-channel complex mask from the signals of a microphone array. This mask is then applied to a reference microphone to render a signal that exhibits a desired directivity pattern. We investigate the training dataset composition and its effect on the directivity realized by the DNN during inference. Using a relatively small DNN, the proposed method is found to approximate the desired directivity pattern closely. Additionally, it allows for the realization of higher-order directivity patterns using a small number of microphones, which is a difficult task for linear and parametric directional filtering.
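The abstract describes estimating a single-channel complex mask and applying it to a reference microphone. The sketch below illustrates only that masking step, on a toy time-frequency grid, under stated assumptions. It assumes the target is each source's STFT coefficient at the reference microphone scaled by the desired pattern gain for that source's direction (far-field). It also uses an oracle ratio mask, computed from the known sources, in place of the DNN estimate. The function names, gains and toy data are hypothetical and are not taken from the paper.

```python
# Minimal sketch (assumptions labeled): single-channel complex masking at a
# reference microphone. The target definition and the oracle mask are
# illustrative only; in the paper, the mask is estimated by a DNN.


def desired_coefficient(source_coeffs, gains):
    """Assumed target for one bin: each source's STFT coefficient at the
    reference microphone scaled by the desired gain for its direction."""
    return sum(g * s for g, s in zip(gains, source_coeffs))


def oracle_complex_mask(ref_coeff, target_coeff, eps=1e-12):
    """Complex ratio mask M = target / reference for one time-frequency bin.
    Returns 0 for (near-)silent reference bins to avoid division by zero."""
    if abs(ref_coeff) < eps:
        return 0j
    return target_coeff / ref_coeff


def apply_mask(mask, ref_coeff):
    """Masking is an element-wise complex multiplication with the reference."""
    return mask * ref_coeff


# Toy STFT grids (2 frames x 3 frequency bins) of two sources at the reference mic.
s1 = [[1 + 1j, 0.5 + 0j, -0.2 + 0.3j], [0.8 - 0.1j, 0j, 1j]]
s2 = [[0.5 - 2j, 0.1 + 0.4j, 0.7 + 0j], [-0.3 + 0.6j, 0.2 - 0.2j, 0j]]
gains = [1.0, 0.1]  # hypothetical pattern gains for the two source directions

# Reference microphone signal: the sum of both source images.
reference = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(s1, s2)]
# Assumed target: each source weighted by its directivity gain.
target = [[desired_coefficient((a, b), gains) for a, b in zip(r1, r2)]
          for r1, r2 in zip(s1, s2)]
# Oracle mask per bin, then masking of the reference.
masks = [[oracle_complex_mask(x, d) for x, d in zip(rx, rd)]
         for rx, rd in zip(reference, target)]
estimate = [[apply_mask(m, x) for m, x in zip(rm, rx)]
            for rm, rx in zip(masks, reference)]
```

With the oracle mask, masking reproduces the assumed target up to rounding. A trained DNN instead has to predict such a mask from the multichannel mixture alone, without access to the individual sources.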

Note to iOS users

To listen to the audio examples on this website, iOS devices must not be in silent mode (as of 06 August 2024). We apologise for the inconvenience.

Audio Examples

Below, we use audio examples to illustrate the performance of our proposed method and of two baseline methods, least-squares beamforming [1] and parametric filtering [2].

Each example is a mixture of two LibriSpeech [3] speakers that we spatialized. We evaluate a model trained on single-speaker data as well as models trained on data with up to three and up to five speakers. For every example, we first present the cardioid target and the corresponding estimates, followed by the 3rd-order differential microphone array (DMA) target and its estimates.

For the proposed method, we use the FT-JNF architecture [4].

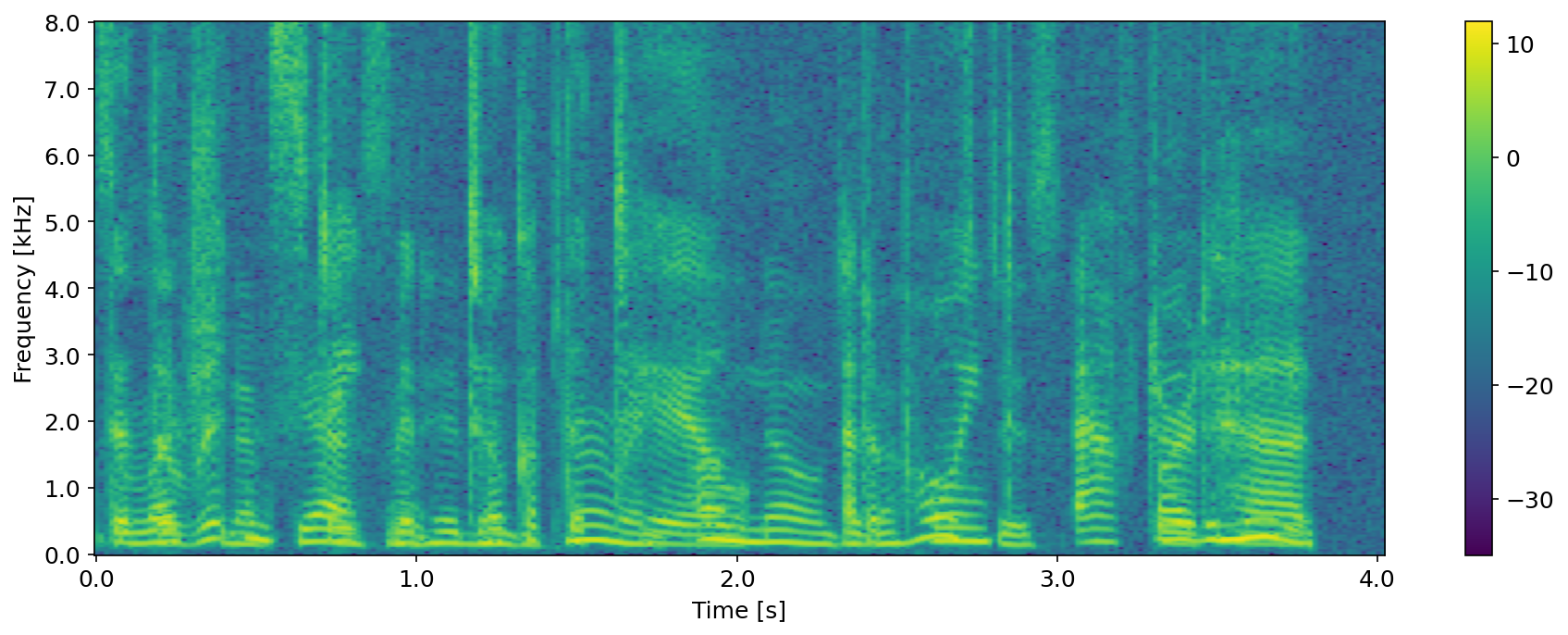

Sources at 2.5 and 87.5 degrees

The target directivity patterns apply the following attenuation to the two sources (in the order listed):

- Cardioid: -0.00 dB and -5.65 dB

- 3rd-Order DMA: -0.02 dB and -41.67 dB
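The cardioid values listed for the examples on this page can be reproduced by evaluating the first-order cardioid pattern 0.5 + 0.5 cos θ at the two source directions and converting the gain to dB, assuming a look direction of 0 degrees. This is a minimal check written for this page, not code from the paper; the function name is hypothetical.

```python
import math


def cardioid_attenuation_db(theta_deg: float) -> float:
    """Gain (dB) of the first-order cardioid 0.5 + 0.5*cos(theta).

    Assumes the look direction is 0 degrees. The gain is floored at 1e-12
    so that the exact null at 180 degrees does not make log10 fail.
    """
    gain = 0.5 + 0.5 * math.cos(math.radians(theta_deg))
    return 20.0 * math.log10(max(gain, 1e-12))


# Source directions (degrees) of the three examples on this page.
EXAMPLES = [(2.5, 87.5), (177.5, 187.5), (92.5, 182.5)]

for directions in EXAMPLES:
    values = [f"{cardioid_attenuation_db(t):.2f} dB" for t in directions]
    print(directions, values)
```

Because the cardioid is symmetric about the look direction, 182.5 degrees yields the same value as 177.5 degrees.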

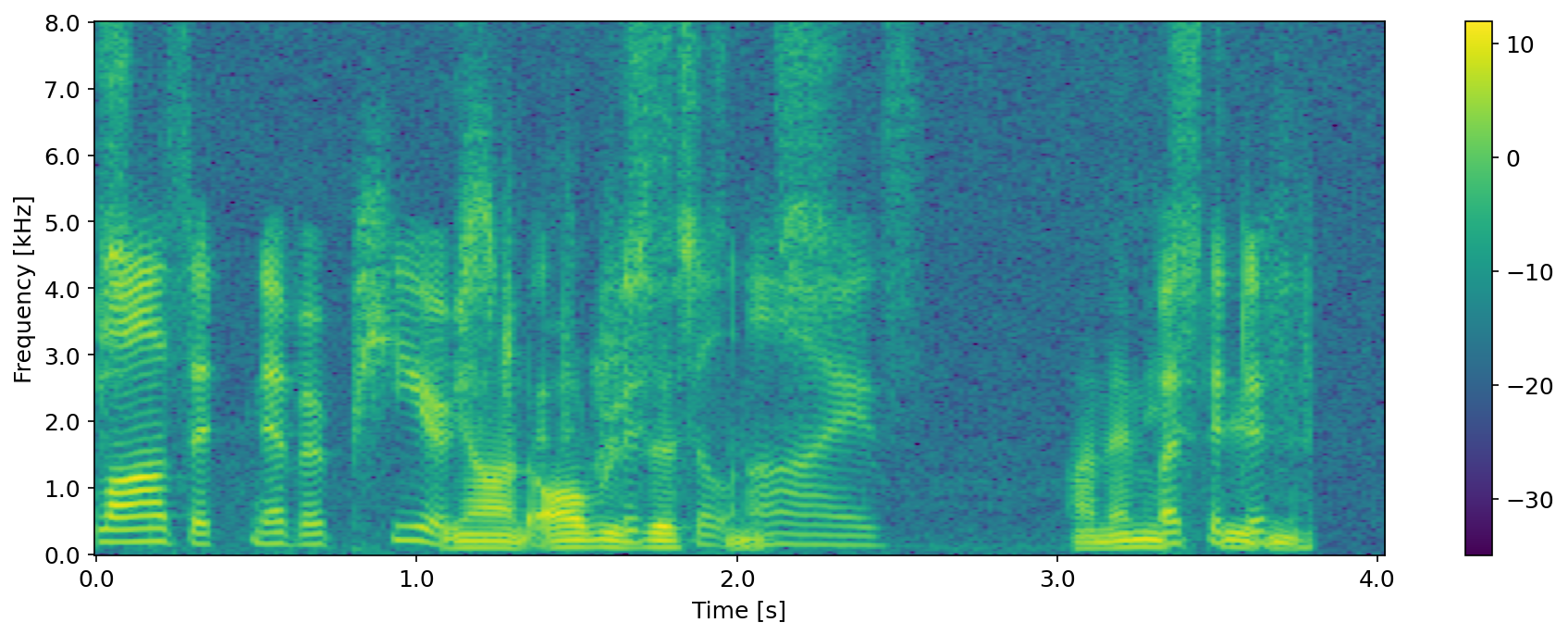

Sources at 177.5 and 187.5 degrees

The target directivity patterns apply the following attenuation to the two sources (in the order listed):

- Cardioid: -66.45 dB and -47.38 dB

- 3rd-Order DMA: -76.02 dB and -57.14 dB

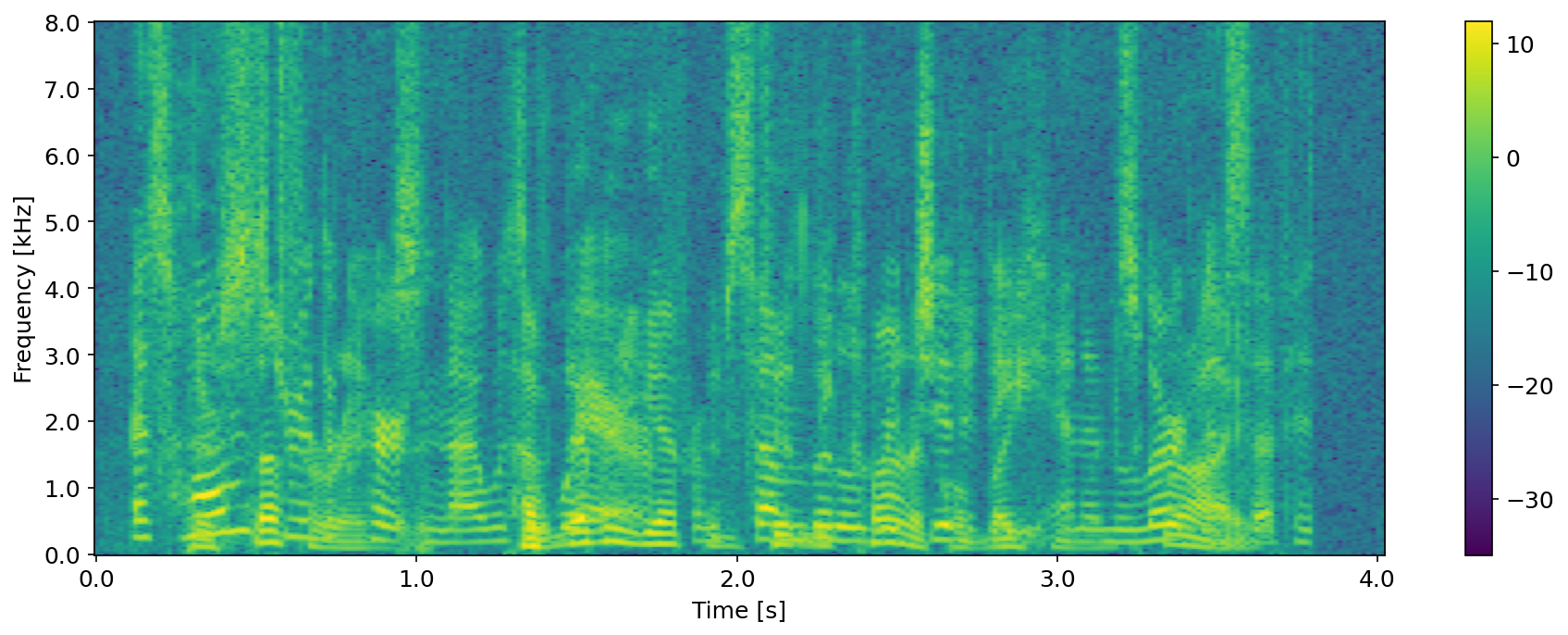

Sources at 92.5 and 182.5 degrees

The target directivity patterns apply the following attenuation to the two sources (in the order listed):

- Cardioid: -6.41 dB and -66.45 dB

- 3rd-Order DMA: -43.95 dB and -76.02 dB
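An N-th order differential pattern can be written as a polynomial in cos θ, D(θ) = Σ a_n cos^n θ for n = 0, …, N, with the coefficients summing to one so that the look direction has 0 dB gain. This page does not give the coefficients of the 3rd-order DMA target used in these examples. The sketch below therefore uses a third-order cardioid, (0.5 + 0.5 cos θ)³, purely as a hypothetical example; it does not reproduce the listed 3rd-order values. It only illustrates how a higher-order pattern can attenuate off-axis sources much more strongly than the first-order cardioid.

```python
import math


def pattern_db(theta_deg: float, coeffs: list[float]) -> float:
    """Gain (dB) of the N-th order pattern sum_n coeffs[n] * cos(theta)**n.

    A floor of 1e-12 on the magnitude avoids log10(0) at exact nulls.
    """
    c = math.cos(math.radians(theta_deg))
    value = sum(a * c**n for n, a in enumerate(coeffs))
    return 20.0 * math.log10(max(abs(value), 1e-12))


# First-order cardioid: 0.5 + 0.5 cos(theta).
FIRST_ORDER_CARDIOID = [0.5, 0.5]
# Hypothetical third-order example: (0.5 + 0.5 cos(theta))**3 expanded,
# i.e. (1 + 3c + 3c^2 + c^3) / 8. NOT the 3rd-order DMA target of this page.
THIRD_ORDER_CARDIOID = [1 / 8, 3 / 8, 3 / 8, 1 / 8]

for theta in (0.0, 45.0, 87.5, 135.0):
    first = pattern_db(theta, FIRST_ORDER_CARDIOID)
    third = pattern_db(theta, THIRD_ORDER_CARDIOID)
    print(f"{theta:6.1f} deg: 1st order {first:7.2f} dB, 3rd order {third:7.2f} dB")
```

Because this particular third-order pattern is the cube of the cardioid, its dB values are exactly three times the first-order ones. Other coefficient choices place nulls and sidelobes elsewhere, which is how a 3rd-order target can reach much deeper attenuation near 90 degrees.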

References

[1] E. Rasumow et al., "Regularization Approaches for Synthesizing HRTF Directivity Patterns," IEEE/ACM Trans. Aud., Sp., Lang. Proc., vol. 24, no. 2, pp. 215-225, 2016.

[2] K. Kowalczyk, O. Thiergart, M. Taseska, G. Del Galdo, V. Pulkki and E. A. P. Habets, "Parametric Spatial Sound Processing: A flexible and efficient solution to sound scene acquisition, modification, and reproduction," IEEE Signal Processing Magazine, vol. 32, no. 2, pp. 31-42, 2015.

[3] V. Panayotov, G. Chen, D. Povey and S. Khudanpur, "Librispeech: An ASR corpus based on public domain audio books," in Proc. IEEE Intl. Conf. on Ac., Sp. and Sig. Proc. (ICASSP), 2015, pp. 5206-5210.

[4] K. Tesch and T. Gerkmann, "Spatially selective deep non-linear filters for speaker extraction," in Proc. IEEE Intl. Conf. on Ac., Sp. and Sig. Proc. (ICASSP), 2023.