An Acoustical Zoom Based on Informed Spatial Filtering

O. Thiergart, Konrad Kowalczyk, and E.A.P. Habets

Published in the Proceedings of the International Workshop on Acoustic Signal Enhancement (IWAENC), 2014.

This paper received the best student paper award at IWAENC 2014.

Abstract

In digital cameras, a visual zoom narrows the apparent angle of view in a video or an image. When audio signals complement the visuals, it is desirable to provide an acoustical zoom that is aligned with the visual image. In this paper, we present an approach that achieves such a zoom effect using a microphone array. Acoustical zooming is realized by a weighted sum of the extracted direct sound and diffuse sound, where the weights depend on the zooming factor. In contrast to previous approaches, which are based on single-channel filters, the proposed approach uses two recently proposed informed multi-channel filters to extract the direct sound and the diffuse sound. Simulation results confirm good performance even in challenging double-talk scenarios.
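The weighted sum described in the abstract can be written per time-frequency bin. The notation below is illustrative and not necessarily the one used in [1]: $k$ and $n$ are the frequency and frame indices, $\hat{X}_{\mathrm{s}}$ is the extracted direct sound, $\hat{X}_{\mathrm{d},i}$ is the extracted diffuse sound after decorrelation for output channel $i$, $\varphi(k,n)$ is the estimated direction of arrival, $\beta$ is the zooming factor, and $G_i$ and $Q$ are hypothetical names for the direct and diffuse weights:

$$
Y_i(k,n) = G_i\big(\varphi(k,n), \beta\big)\, \hat{X}_{\mathrm{s}}(k,n) + Q(\beta)\, \hat{X}_{\mathrm{d},i}(k,n), \qquad i \in \{1, 2\}
$$

The direct weight $G_i$ carries the direction-dependent panning and the attenuation outside the view angle, while the diffuse weight $Q$ depends only on the zooming factor.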

Description

Details of the simulation setup are described in [1]. A shoebox room (6 × 5 × 3.5 m) was simulated. The sound was captured with a uniform linear array of M omnidirectional microphones. Two sound sources were located at different angles at a distance g from the microphone array. Microphone self-noise was added to the microphone signals (21 dB segmental SNR).
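The 21 dB figure is a segmental SNR, i.e. the per-frame SNR in dB averaged over short frames rather than a single SNR over the whole signal. The following is a minimal sketch of how noise could be scaled to a target segmental SNR; it assumes non-overlapping frames of a hypothetical length `frame_len` and no per-frame clamping, since the frame length and any clamping used in [1] are not stated here. The test signals are synthetic placeholders, not the simulated microphone signals.

```python
import math
import random


def segmental_snr_db(signal, noise, frame_len=256):
    """Mean of per-frame SNRs in dB over non-overlapping frames.

    Frames where the signal or the noise has zero energy are skipped to
    avoid log(0). Practical implementations often also clamp per-frame
    values (e.g. to [-10, 35] dB); that is omitted in this sketch.
    """
    if len(signal) != len(noise):
        raise ValueError("signal and noise must have the same length")
    frame_snrs = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        e_s = sum(x * x for x in signal[start:start + frame_len])
        e_v = sum(x * x for x in noise[start:start + frame_len])
        if e_s > 0 and e_v > 0:
            frame_snrs.append(10 * math.log10(e_s / e_v))
    if not frame_snrs:
        raise ValueError("no frame with non-zero signal and noise energy")
    return sum(frame_snrs) / len(frame_snrs)


def scale_noise_to_segsnr(signal, noise, target_db, frame_len=256):
    """Scale the noise so that the segmental SNR equals target_db.

    Multiplying the noise by a gain g lowers every per-frame SNR by
    20*log10(g) dB, so a single global gain reaches the target exactly.
    """
    current = segmental_snr_db(signal, noise, frame_len)
    gain = 10 ** ((current - target_db) / 20)
    return [gain * x for x in noise]


# Usage with synthetic placeholder signals.
rng = random.Random(0)
speech = [math.sin(0.05 * n) for n in range(4096)]
noise = [rng.gauss(0.0, 0.1) for _ in range(4096)]
scaled = scale_noise_to_segsnr(speech, noise, 21.0)
noisy = [s + v for s, v in zip(speech, scaled)]
```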

The acoustical zoom is achieved by a weighted sum of the extracted direct sound and reverberation, where the weights depend on the zooming factor. When zooming in, lateral sources move outwards in the acoustical image and the direct-to-reverberant ratio is increased. Moreover, sources are attenuated when mapped to positions outside the view angle of the camera.
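The three effects above (lateral sources moving outwards, attenuation outside the view angle, and a higher direct-to-reverberant ratio) can be illustrated with a simple geometric sketch. This is not the mapping used in [1]. It assumes a pinhole-camera model in which zooming by a factor `beta` scales the image coordinate to `beta * tan(phi)`, constant-power stereo panning, a hypothetical fixed attenuation `outside_gain` for sources mapped outside the view, and a hypothetical diffuse weight `1 / beta`. All function and parameter names are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class ZoomGains:
    left: float     # weight for the direct sound in the left channel
    right: float    # weight for the direct sound in the right channel
    diffuse: float  # weight for the (decorrelated) diffuse sound


def zoomed_angle(phi, beta):
    """Apparent source angle after zooming by beta (pinhole camera model).

    phi: DOA in radians, |phi| < pi/2, positive = right of the camera axis.
    """
    return math.atan(beta * math.tan(phi))


def zoom_gains(phi, beta, half_view, outside_gain=0.1):
    """Hypothetical gains for one DOA estimate and zooming factor beta.

    half_view: half of the unzoomed horizontal view angle, in radians.
    outside_gain: hypothetical attenuation for sources outside the view.
    """
    phi_z = zoomed_angle(phi, beta)
    # Normalised image position: -1 = left edge, +1 = right edge.
    p = math.tan(phi_z) / math.tan(half_view)
    visible = abs(p) <= 1.0
    p = max(-1.0, min(1.0, p))  # sources outside the view sit at the edge
    theta = (p + 1.0) * math.pi / 4  # 0 = hard left, pi/2 = hard right
    g_dir = 1.0 if visible else outside_gain
    return ZoomGains(
        left=g_dir * math.cos(theta),
        right=g_dir * math.sin(theta),
        diffuse=1.0 / beta,  # hypothetical: less reverberation when zooming in
    )


half_view = math.radians(30)  # hypothetical 60-degree camera view angle
for beta in (1.0, 2.0):
    g = zoom_gains(math.radians(20), beta, half_view)
    print(beta, round(g.left, 3), round(g.right, 3), g.diffuse)
```

With this assumed 60° view angle, a source at 20° sits about 63% of the way from the center to the right edge at `beta = 1`. At `beta = 2` it is mapped beyond the edge, so it is panned hard right and attenuated to `outside_gain`, while the diffuse weight halves.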

The direct sound and reverberation are extracted using two recently proposed informed spatial filters [2,3]. When reproducing the sound, the reverberant signal is decorrelated between the two stereo channels to achieve a diffuse sound impression.
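The decorrelator is not specified on this page. One common and simple choice, used here purely as an illustration, multiplies the diffuse STFT coefficients of each output channel by fixed, independent random phases per frequency bin. The sketch below applies this to a single synthetic frame and measures the inter-channel coherence across bins; the names `make_phase_decorrelators`, `decorrelate_frame`, and `coherence` are hypothetical.

```python
import cmath
import math
import random


def make_phase_decorrelators(num_bins, num_channels=2, seed=None):
    """One fixed random phase factor per frequency bin and channel.

    Keeping the phases fixed over time (instead of drawing new ones every
    frame) avoids audible frame-to-frame discontinuities. For a one-sided
    STFT of a real signal, the DC and Nyquist bins should keep real factors
    (e.g. +1 or -1); that detail is omitted in this sketch.
    """
    rng = random.Random(seed)
    return [
        [cmath.exp(1j * rng.uniform(-math.pi, math.pi)) for _ in range(num_bins)]
        for _ in range(num_channels)
    ]


def decorrelate_frame(diffuse_bins, phases):
    """Apply each channel's phase vector to one STFT frame of diffuse sound."""
    return [[x * ph for x, ph in zip(diffuse_bins, channel)] for channel in phases]


def coherence(a, b):
    """Magnitude of the normalised inner product across bins (0 = uncorrelated)."""
    num = abs(sum(x * y.conjugate() for x, y in zip(a, b)))
    den = math.sqrt(sum(abs(x) ** 2 for x in a) * sum(abs(y) ** 2 for y in b))
    return num / den


# Usage with a synthetic diffuse frame (placeholder data).
rng = random.Random(1)
frame = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2048)]
phases = make_phase_decorrelators(len(frame), seed=42)
left, right = decorrelate_frame(frame, phases)
print(coherence(left, right))
```

Because only phases change, the magnitude spectrum of each channel equals that of the extracted diffuse sound, so the reverberation level is preserved while the two channels become mutually incoherent.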

Listening Examples (Stereo)

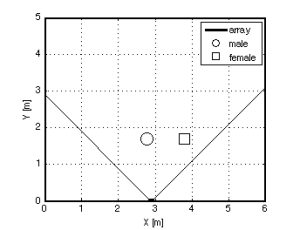

Example 1: female speaker (right) and male speaker (center)

(RT60 = 270 ms, M = 6, r = 3 cm, g = 1.7 m)

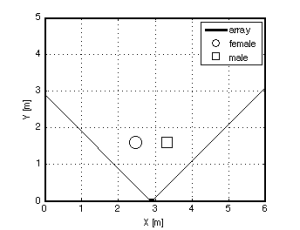

Example 2: female speaker (right) and male speaker (left)

(RT60 = 310 ms, M = 5, r = 3.2 cm, g = 1.6 m)

- Only direction-based panning
- No reproduction of diffuse sound
- No attenuation if outside the view angle of the camera

References

- [1] O. Thiergart, K. Kowalczyk, and E.A.P. Habets, "An Acoustical Zoom Based on Informed Spatial Filtering," in Proc. of the International Workshop on Acoustic Signal Enhancement (IWAENC), 2014.

- [2] O. Thiergart and E.A.P. Habets, "An informed LCMV filter based on multiple instantaneous direction-of-arrival estimates," in Proc. of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2013.

- [3] O. Thiergart and E.A.P. Habets, "Extracting reverberant sound using a linearly constrained minimum variance spatial filter," IEEE Signal Processing Letters, vol. 21, no. 5, pp. 630-634, May 2014.